Comparison with spreadsheets¶

Since many potential pandas users have some familiarity with spreadsheet programs like Excel, this page is meant to provide some examples of how various spreadsheet operations would be performed using pandas. This page will use terminology and link to documentation for Excel, but much will be the same/similar in Google Sheets, LibreOffice Calc, Apple Numbers, and other Excel-compatible spreadsheet software.

If you’re new to pandas, you might want to first read through 10 Minutes to pandas to familiarize yourself with the library.

As is customary, we import pandas and NumPy as follows:

In [1]: import pandas as pd

In [2]: import numpy as np

Data structures¶

General terminology translation¶

pandas |

Excel |

|---|---|

|

worksheet |

|

column |

|

row headings |

row |

row |

|

empty cell |

DataFrame¶

A DataFrame in pandas is analogous to an Excel worksheet. While an Excel workbook can contain

multiple worksheets, pandas DataFrames exist independently.

Series¶

A Series is the data structure that represents one column of a DataFrame. Working with a

Series is analogous to referencing a column of a spreadsheet.

Index¶

Every DataFrame and Series has an Index, which are labels on the rows of the data. In

pandas, if no index is specified, a RangeIndex is used by default (first row = 0,

second row = 1, and so on), analogous to row headings/numbers in spreadsheets.

In pandas, indexes can be set to one (or multiple) unique values, which is like having a column that

is used as the row identifier in a worksheet. Unlike most spreadsheets, these Index values can

actually be used to reference the rows. (Note that this can be done in Excel with structured

references.)

For example, in spreadsheets, you would reference the first row as A1:Z1, while in pandas you

could use populations.loc['Chicago'].

Index values are also persistent, so if you re-order the rows in a DataFrame, the label for a

particular row don’t change.

See the indexing documentation for much more on how to use an Index

effectively.

Copies vs. in place operations¶

Most pandas operations return copies of the Series/DataFrame. To make the changes “stick”,

you’ll need to either assign to a new variable:

sorted_df = df.sort_values("col1")

or overwrite the original one:

df = df.sort_values("col1")

Note

You will see an inplace=True keyword argument available for some methods:

df.sort_values("col1", inplace=True)

Its use is discouraged. More information.

Data input / output¶

Constructing a DataFrame from values¶

In a spreadsheet, values can be typed directly into cells.

A pandas DataFrame can be constructed in many different ways,

but for a small number of values, it is often convenient to specify it as

a Python dictionary, where the keys are the column names

and the values are the data.

In [3]: df = pd.DataFrame({"x": [1, 3, 5], "y": [2, 4, 6]})

In [4]: df

Out[4]:

x y

0 1 2

1 3 4

2 5 6

Reading external data¶

Both Excel and pandas can import data from various sources in various formats.

CSV¶

Let’s load and display the tips

dataset from the pandas tests, which is a CSV file. In Excel, you would download and then

open the CSV.

In pandas, you pass the URL or local path of the CSV file to read_csv():

In [5]: url = (

...: "https://raw.githubusercontent.com/pandas-dev"

...: "/pandas/main/pandas/tests/io/data/csv/tips.csv"

...: )

...:

In [6]: tips = pd.read_csv(url)

---------------------------------------------------------------------------

ConnectionRefusedError Traceback (most recent call last)

File /usr/lib/python3.11/urllib/request.py:1348, in AbstractHTTPHandler.do_open(self, http_class, req, **http_conn_args)

1347 try:

-> 1348 h.request(req.get_method(), req.selector, req.data, headers,

1349 encode_chunked=req.has_header('Transfer-encoding'))

1350 except OSError as err: # timeout error

File /usr/lib/python3.11/http/client.py:1282, in HTTPConnection.request(self, method, url, body, headers, encode_chunked)

1281 """Send a complete request to the server."""

-> 1282 self._send_request(method, url, body, headers, encode_chunked)

File /usr/lib/python3.11/http/client.py:1328, in HTTPConnection._send_request(self, method, url, body, headers, encode_chunked)

1327 body = _encode(body, 'body')

-> 1328 self.endheaders(body, encode_chunked=encode_chunked)

File /usr/lib/python3.11/http/client.py:1277, in HTTPConnection.endheaders(self, message_body, encode_chunked)

1276 raise CannotSendHeader()

-> 1277 self._send_output(message_body, encode_chunked=encode_chunked)

File /usr/lib/python3.11/http/client.py:1037, in HTTPConnection._send_output(self, message_body, encode_chunked)

1036 del self._buffer[:]

-> 1037 self.send(msg)

1039 if message_body is not None:

1040

1041 # create a consistent interface to message_body

File /usr/lib/python3.11/http/client.py:975, in HTTPConnection.send(self, data)

974 if self.auto_open:

--> 975 self.connect()

976 else:

File /usr/lib/python3.11/http/client.py:1447, in HTTPSConnection.connect(self)

1445 "Connect to a host on a given (SSL) port."

-> 1447 super().connect()

1449 if self._tunnel_host:

File /usr/lib/python3.11/http/client.py:941, in HTTPConnection.connect(self)

940 sys.audit("http.client.connect", self, self.host, self.port)

--> 941 self.sock = self._create_connection(

942 (self.host,self.port), self.timeout, self.source_address)

943 # Might fail in OSs that don't implement TCP_NODELAY

File /usr/lib/python3.11/socket.py:851, in create_connection(address, timeout, source_address, all_errors)

850 if not all_errors:

--> 851 raise exceptions[0]

852 raise ExceptionGroup("create_connection failed", exceptions)

File /usr/lib/python3.11/socket.py:836, in create_connection(address, timeout, source_address, all_errors)

835 sock.bind(source_address)

--> 836 sock.connect(sa)

837 # Break explicitly a reference cycle

ConnectionRefusedError: [Errno 111] Connection refused

During handling of the above exception, another exception occurred:

URLError Traceback (most recent call last)

Cell In [6], line 1

----> 1 tips = pd.read_csv(url)

File /usr/lib/python3/dist-packages/pandas/util/_decorators.py:211, in deprecate_kwarg.<locals>._deprecate_kwarg.<locals>.wrapper(*args, **kwargs)

209 else:

210 kwargs[new_arg_name] = new_arg_value

--> 211 return func(*args, **kwargs)

File /usr/lib/python3/dist-packages/pandas/util/_decorators.py:331, in deprecate_nonkeyword_arguments.<locals>.decorate.<locals>.wrapper(*args, **kwargs)

325 if len(args) > num_allow_args:

326 warnings.warn(

327 msg.format(arguments=_format_argument_list(allow_args)),

328 FutureWarning,

329 stacklevel=find_stack_level(),

330 )

--> 331 return func(*args, **kwargs)

File /usr/lib/python3/dist-packages/pandas/io/parsers/readers.py:950, in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, encoding_errors, dialect, error_bad_lines, warn_bad_lines, on_bad_lines, delim_whitespace, low_memory, memory_map, float_precision, storage_options)

935 kwds_defaults = _refine_defaults_read(

936 dialect,

937 delimiter,

(...)

946 defaults={"delimiter": ","},

947 )

948 kwds.update(kwds_defaults)

--> 950 return _read(filepath_or_buffer, kwds)

File /usr/lib/python3/dist-packages/pandas/io/parsers/readers.py:605, in _read(filepath_or_buffer, kwds)

602 _validate_names(kwds.get("names", None))

604 # Create the parser.

--> 605 parser = TextFileReader(filepath_or_buffer, **kwds)

607 if chunksize or iterator:

608 return parser

File /usr/lib/python3/dist-packages/pandas/io/parsers/readers.py:1442, in TextFileReader.__init__(self, f, engine, **kwds)

1439 self.options["has_index_names"] = kwds["has_index_names"]

1441 self.handles: IOHandles | None = None

-> 1442 self._engine = self._make_engine(f, self.engine)

File /usr/lib/python3/dist-packages/pandas/io/parsers/readers.py:1735, in TextFileReader._make_engine(self, f, engine)

1733 if "b" not in mode:

1734 mode += "b"

-> 1735 self.handles = get_handle(

1736 f,

1737 mode,

1738 encoding=self.options.get("encoding", None),

1739 compression=self.options.get("compression", None),

1740 memory_map=self.options.get("memory_map", False),

1741 is_text=is_text,

1742 errors=self.options.get("encoding_errors", "strict"),

1743 storage_options=self.options.get("storage_options", None),

1744 )

1745 assert self.handles is not None

1746 f = self.handles.handle

File /usr/lib/python3/dist-packages/pandas/io/common.py:713, in get_handle(path_or_buf, mode, encoding, compression, memory_map, is_text, errors, storage_options)

710 codecs.lookup_error(errors)

712 # open URLs

--> 713 ioargs = _get_filepath_or_buffer(

714 path_or_buf,

715 encoding=encoding,

716 compression=compression,

717 mode=mode,

718 storage_options=storage_options,

719 )

721 handle = ioargs.filepath_or_buffer

722 handles: list[BaseBuffer]

File /usr/lib/python3/dist-packages/pandas/io/common.py:363, in _get_filepath_or_buffer(filepath_or_buffer, encoding, compression, mode, storage_options)

361 # assuming storage_options is to be interpreted as headers

362 req_info = urllib.request.Request(filepath_or_buffer, headers=storage_options)

--> 363 with urlopen(req_info) as req:

364 content_encoding = req.headers.get("Content-Encoding", None)

365 if content_encoding == "gzip":

366 # Override compression based on Content-Encoding header

File /usr/lib/python3/dist-packages/pandas/io/common.py:265, in urlopen(*args, **kwargs)

259 """

260 Lazy-import wrapper for stdlib urlopen, as that imports a big chunk of

261 the stdlib.

262 """

263 import urllib.request

--> 265 return urllib.request.urlopen(*args, **kwargs)

File /usr/lib/python3.11/urllib/request.py:216, in urlopen(url, data, timeout, cafile, capath, cadefault, context)

214 else:

215 opener = _opener

--> 216 return opener.open(url, data, timeout)

File /usr/lib/python3.11/urllib/request.py:519, in OpenerDirector.open(self, fullurl, data, timeout)

516 req = meth(req)

518 sys.audit('urllib.Request', req.full_url, req.data, req.headers, req.get_method())

--> 519 response = self._open(req, data)

521 # post-process response

522 meth_name = protocol+"_response"

File /usr/lib/python3.11/urllib/request.py:536, in OpenerDirector._open(self, req, data)

533 return result

535 protocol = req.type

--> 536 result = self._call_chain(self.handle_open, protocol, protocol +

537 '_open', req)

538 if result:

539 return result

File /usr/lib/python3.11/urllib/request.py:496, in OpenerDirector._call_chain(self, chain, kind, meth_name, *args)

494 for handler in handlers:

495 func = getattr(handler, meth_name)

--> 496 result = func(*args)

497 if result is not None:

498 return result

File /usr/lib/python3.11/urllib/request.py:1391, in HTTPSHandler.https_open(self, req)

1390 def https_open(self, req):

-> 1391 return self.do_open(http.client.HTTPSConnection, req,

1392 context=self._context, check_hostname=self._check_hostname)

File /usr/lib/python3.11/urllib/request.py:1351, in AbstractHTTPHandler.do_open(self, http_class, req, **http_conn_args)

1348 h.request(req.get_method(), req.selector, req.data, headers,

1349 encode_chunked=req.has_header('Transfer-encoding'))

1350 except OSError as err: # timeout error

-> 1351 raise URLError(err)

1352 r = h.getresponse()

1353 except:

URLError: <urlopen error [Errno 111] Connection refused>

In [7]: tips

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [7], line 1

----> 1 tips

NameError: name 'tips' is not defined

Like Excel’s Text Import Wizard,

read_csv can take a number of parameters to specify how the data should be parsed. For

example, if the data was instead tab delimited, and did not have column names, the pandas command

would be:

tips = pd.read_csv("tips.csv", sep="\t", header=None)

# alternatively, read_table is an alias to read_csv with tab delimiter

tips = pd.read_table("tips.csv", header=None)

Excel files¶

Excel opens various Excel file formats by double-clicking them, or using the Open menu. In pandas, you use special methods for reading and writing from/to Excel files.

Let’s first create a new Excel file based on the tips dataframe in the above example:

tips.to_excel("./tips.xlsx")

Should you wish to subsequently access the data in the tips.xlsx file, you can read it into your module using

tips_df = pd.read_excel("./tips.xlsx", index_col=0)

You have just read in an Excel file using pandas!

Limiting output¶

Spreadsheet programs will only show one screenful of data at a time and then allow you to scroll, so

there isn’t really a need to limit output. In pandas, you’ll need to put a little more thought into

controlling how your DataFrames are displayed.

By default, pandas will truncate output of large DataFrames to show the first and last rows.

This can be overridden by changing the pandas options, or using

DataFrame.head() or DataFrame.tail().

In [8]: tips.head(5)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [8], line 1

----> 1 tips.head(5)

NameError: name 'tips' is not defined

Exporting data¶

By default, desktop spreadsheet software will save to its respective file format (.xlsx, .ods, etc). You can, however, save to other file formats.

pandas can create Excel files, CSV, or a number of other formats.

Data operations¶

Operations on columns¶

In spreadsheets, formulas are often created in individual cells and then dragged into other cells to compute them for other columns. In pandas, you’re able to do operations on whole columns directly.

pandas provides vectorized operations by specifying the individual Series in the

DataFrame. New columns can be assigned in the same way. The DataFrame.drop() method drops

a column from the DataFrame.

In [9]: tips["total_bill"] = tips["total_bill"] - 2

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [9], line 1

----> 1 tips["total_bill"] = tips["total_bill"] - 2

NameError: name 'tips' is not defined

In [10]: tips["new_bill"] = tips["total_bill"] / 2

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [10], line 1

----> 1 tips["new_bill"] = tips["total_bill"] / 2

NameError: name 'tips' is not defined

In [11]: tips

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [11], line 1

----> 1 tips

NameError: name 'tips' is not defined

In [12]: tips = tips.drop("new_bill", axis=1)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [12], line 1

----> 1 tips = tips.drop("new_bill", axis=1)

NameError: name 'tips' is not defined

Note that we aren’t having to tell it to do that subtraction cell-by-cell — pandas handles that for us. See how to create new columns derived from existing columns.

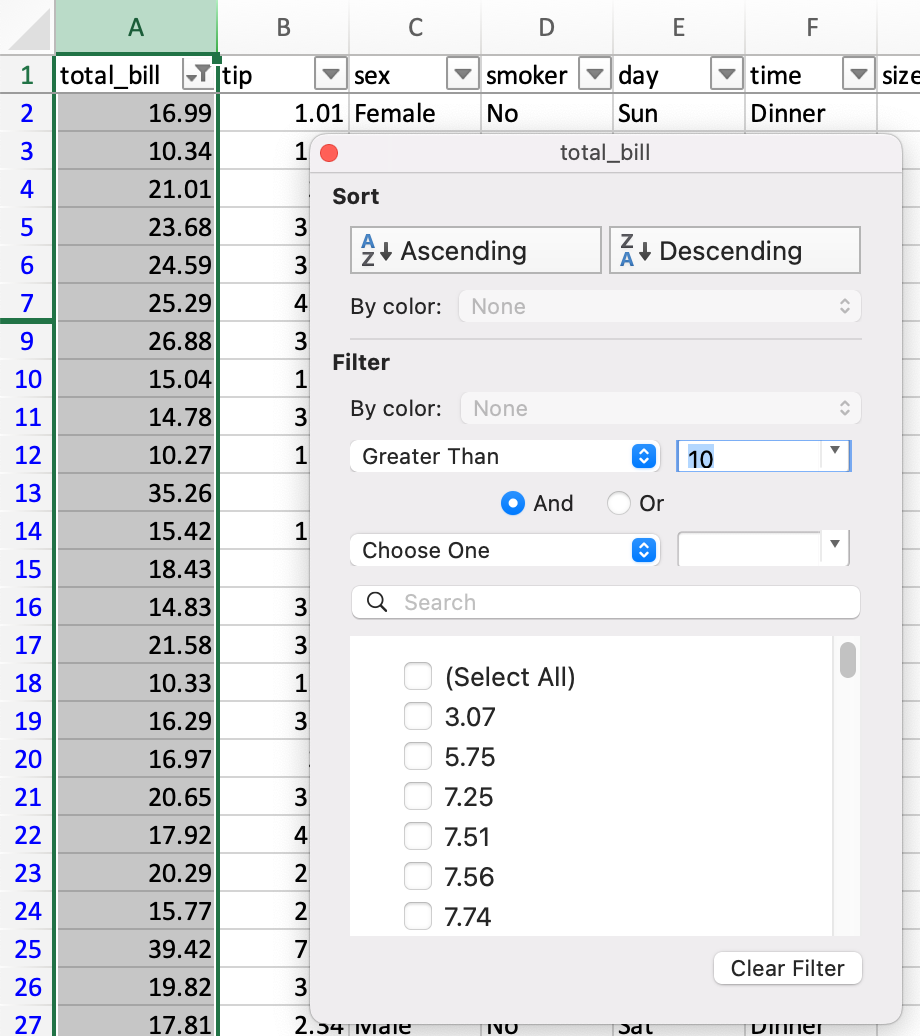

Filtering¶

In Excel, filtering is done through a graphical menu.

DataFrames can be filtered in multiple ways; the most intuitive of which is using boolean indexing.

In [13]: tips[tips["total_bill"] > 10]

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [13], line 1

----> 1 tips[tips["total_bill"] > 10]

NameError: name 'tips' is not defined

The above statement is simply passing a Series of True/False objects to the DataFrame,

returning all rows with True.

In [14]: is_dinner = tips["time"] == "Dinner"

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [14], line 1

----> 1 is_dinner = tips["time"] == "Dinner"

NameError: name 'tips' is not defined

In [15]: is_dinner

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [15], line 1

----> 1 is_dinner

NameError: name 'is_dinner' is not defined

In [16]: is_dinner.value_counts()

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [16], line 1

----> 1 is_dinner.value_counts()

NameError: name 'is_dinner' is not defined

In [17]: tips[is_dinner]

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [17], line 1

----> 1 tips[is_dinner]

NameError: name 'tips' is not defined

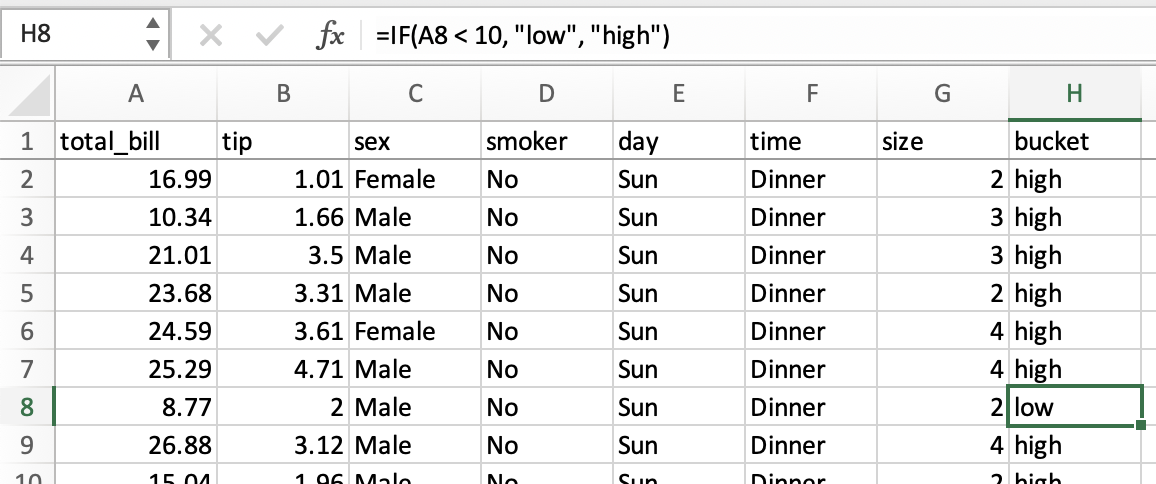

If/then logic¶

Let’s say we want to make a bucket column with values of low and high, based on whether

the total_bill is less or more than $10.

In spreadsheets, logical comparison can be done with conditional formulas.

We’d use a formula of =IF(A2 < 10, "low", "high"), dragged to all cells in a new bucket

column.

The same operation in pandas can be accomplished using

the where method from numpy.

In [18]: tips["bucket"] = np.where(tips["total_bill"] < 10, "low", "high")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [18], line 1

----> 1 tips["bucket"] = np.where(tips["total_bill"] < 10, "low", "high")

NameError: name 'tips' is not defined

In [19]: tips

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [19], line 1

----> 1 tips

NameError: name 'tips' is not defined

Date functionality¶

This section will refer to “dates”, but timestamps are handled similarly.

We can think of date functionality in two parts: parsing, and output. In spreadsheets, date values are generally parsed automatically, though there is a DATEVALUE function if you need it. In pandas, you need to explicitly convert plain text to datetime objects, either while reading from a CSV or once in a DataFrame.

Once parsed, spreadsheets display the dates in a default format, though the format can be changed.

In pandas, you’ll generally want to keep dates as datetime objects while you’re doing

calculations with them. Outputting parts of dates (such as the year) is done through date

functions

in spreadsheets, and datetime properties in pandas.

Given date1 and date2 in columns A and B of a spreadsheet, you might have these

formulas:

column |

formula |

|---|---|

|

|

|

|

|

|

|

|

The equivalent pandas operations are shown below.

In [20]: tips["date1"] = pd.Timestamp("2013-01-15")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [20], line 1

----> 1 tips["date1"] = pd.Timestamp("2013-01-15")

NameError: name 'tips' is not defined

In [21]: tips["date2"] = pd.Timestamp("2015-02-15")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [21], line 1

----> 1 tips["date2"] = pd.Timestamp("2015-02-15")

NameError: name 'tips' is not defined

In [22]: tips["date1_year"] = tips["date1"].dt.year

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [22], line 1

----> 1 tips["date1_year"] = tips["date1"].dt.year

NameError: name 'tips' is not defined

In [23]: tips["date2_month"] = tips["date2"].dt.month

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [23], line 1

----> 1 tips["date2_month"] = tips["date2"].dt.month

NameError: name 'tips' is not defined

In [24]: tips["date1_next"] = tips["date1"] + pd.offsets.MonthBegin()

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [24], line 1

----> 1 tips["date1_next"] = tips["date1"] + pd.offsets.MonthBegin()

NameError: name 'tips' is not defined

In [25]: tips["months_between"] = tips["date2"].dt.to_period("M") - tips[

....: "date1"

....: ].dt.to_period("M")

....:

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [25], line 1

----> 1 tips["months_between"] = tips["date2"].dt.to_period("M") - tips[

2 "date1"

3 ].dt.to_period("M")

NameError: name 'tips' is not defined

In [26]: tips[

....: ["date1", "date2", "date1_year", "date2_month", "date1_next", "months_between"]

....: ]

....:

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [26], line 1

----> 1 tips[

2 ["date1", "date2", "date1_year", "date2_month", "date1_next", "months_between"]

3 ]

NameError: name 'tips' is not defined

See Time series / date functionality for more details.

Selection of columns¶

In spreadsheets, you can select columns you want by:

Referencing a range from one worksheet into another

Since spreadsheet columns are typically named in a header row, renaming a column is simply a matter of changing the text in that first cell.

The same operations are expressed in pandas below.

Keep certain columns¶

In [27]: tips[["sex", "total_bill", "tip"]]

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [27], line 1

----> 1 tips[["sex", "total_bill", "tip"]]

NameError: name 'tips' is not defined

Drop a column¶

In [28]: tips.drop("sex", axis=1)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [28], line 1

----> 1 tips.drop("sex", axis=1)

NameError: name 'tips' is not defined

Rename a column¶

In [29]: tips.rename(columns={"total_bill": "total_bill_2"})

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [29], line 1

----> 1 tips.rename(columns={"total_bill": "total_bill_2"})

NameError: name 'tips' is not defined

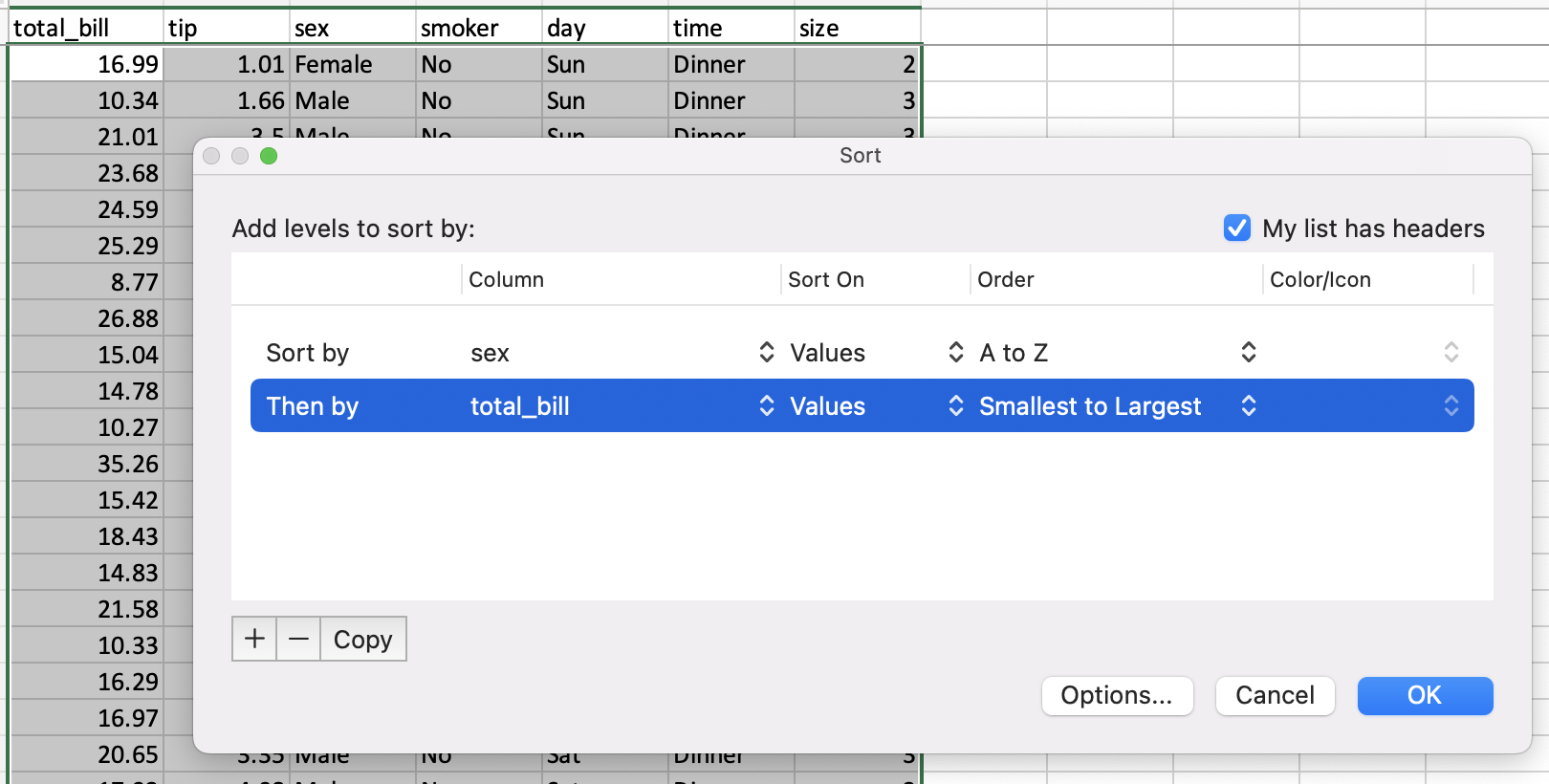

Sorting by values¶

Sorting in spreadsheets is accomplished via the sort dialog.

pandas has a DataFrame.sort_values() method, which takes a list of columns to sort by.

In [30]: tips = tips.sort_values(["sex", "total_bill"])

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [30], line 1

----> 1 tips = tips.sort_values(["sex", "total_bill"])

NameError: name 'tips' is not defined

In [31]: tips

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [31], line 1

----> 1 tips

NameError: name 'tips' is not defined

String processing¶

Finding length of string¶

In spreadsheets, the number of characters in text can be found with the LEN function. This can be used with the TRIM function to remove extra whitespace.

=LEN(TRIM(A2))

You can find the length of a character string with Series.str.len().

In Python 3, all strings are Unicode strings. len includes trailing blanks.

Use len and rstrip to exclude trailing blanks.

In [32]: tips["time"].str.len()

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [32], line 1

----> 1 tips["time"].str.len()

NameError: name 'tips' is not defined

In [33]: tips["time"].str.rstrip().str.len()

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [33], line 1

----> 1 tips["time"].str.rstrip().str.len()

NameError: name 'tips' is not defined

Note this will still include multiple spaces within the string, so isn’t 100% equivalent.

Finding position of substring¶

The FIND

spreadsheet function returns the position of a substring, with the first character being 1.

You can find the position of a character in a column of strings with the Series.str.find()

method. find searches for the first position of the substring. If the substring is found, the

method returns its position. If not found, it returns -1. Keep in mind that Python indexes are

zero-based.

In [34]: tips["sex"].str.find("ale")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [34], line 1

----> 1 tips["sex"].str.find("ale")

NameError: name 'tips' is not defined

Extracting substring by position¶

Spreadsheets have a MID formula for extracting a substring from a given position. To get the first character:

=MID(A2,1,1)

With pandas you can use [] notation to extract a substring

from a string by position locations. Keep in mind that Python

indexes are zero-based.

In [35]: tips["sex"].str[0:1]

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [35], line 1

----> 1 tips["sex"].str[0:1]

NameError: name 'tips' is not defined

Extracting nth word¶

In Excel, you might use the Text to Columns Wizard for splitting text and retrieving a specific column. (Note it’s possible to do so through a formula as well.)

The simplest way to extract words in pandas is to split the strings by spaces, then reference the word by index. Note there are more powerful approaches should you need them.

In [36]: firstlast = pd.DataFrame({"String": ["John Smith", "Jane Cook"]})

In [37]: firstlast["First_Name"] = firstlast["String"].str.split(" ", expand=True)[0]

In [38]: firstlast["Last_Name"] = firstlast["String"].str.rsplit(" ", expand=True)[1]

In [39]: firstlast

Out[39]:

String First_Name Last_Name

0 John Smith John Smith

1 Jane Cook Jane Cook

Changing case¶

Spreadsheets provide UPPER, LOWER, and PROPER functions for converting text to upper, lower, and title case, respectively.

The equivalent pandas methods are Series.str.upper(), Series.str.lower(), and

Series.str.title().

In [40]: firstlast = pd.DataFrame({"string": ["John Smith", "Jane Cook"]})

In [41]: firstlast["upper"] = firstlast["string"].str.upper()

In [42]: firstlast["lower"] = firstlast["string"].str.lower()

In [43]: firstlast["title"] = firstlast["string"].str.title()

In [44]: firstlast

Out[44]:

string upper lower title

0 John Smith JOHN SMITH john smith John Smith

1 Jane Cook JANE COOK jane cook Jane Cook

Merging¶

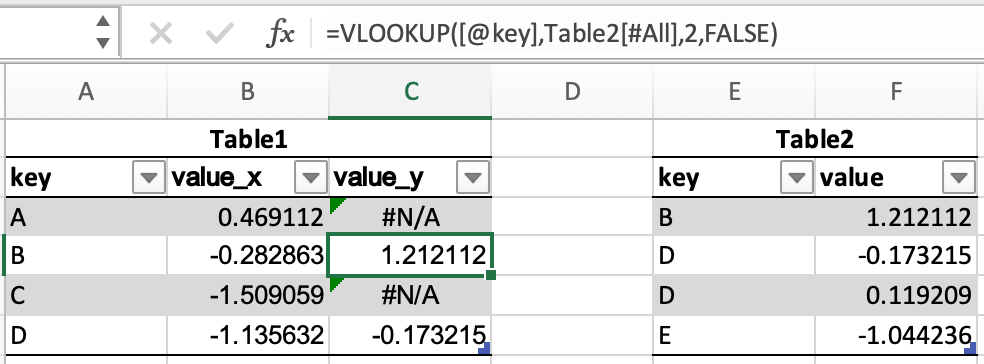

The following tables will be used in the merge examples:

In [45]: df1 = pd.DataFrame({"key": ["A", "B", "C", "D"], "value": np.random.randn(4)})

In [46]: df1

Out[46]:

key value

0 A 0.469112

1 B -0.282863

2 C -1.509059

3 D -1.135632

In [47]: df2 = pd.DataFrame({"key": ["B", "D", "D", "E"], "value": np.random.randn(4)})

In [48]: df2

Out[48]:

key value

0 B 1.212112

1 D -0.173215

2 D 0.119209

3 E -1.044236

In Excel, there are merging of tables can be done through a VLOOKUP.

pandas DataFrames have a merge() method, which provides similar functionality. The

data does not have to be sorted ahead of time, and different join types are accomplished via the

how keyword.

In [49]: inner_join = df1.merge(df2, on=["key"], how="inner")

In [50]: inner_join

Out[50]:

key value_x value_y

0 B -0.282863 1.212112

1 D -1.135632 -0.173215

2 D -1.135632 0.119209

In [51]: left_join = df1.merge(df2, on=["key"], how="left")

In [52]: left_join

Out[52]:

key value_x value_y

0 A 0.469112 NaN

1 B -0.282863 1.212112

2 C -1.509059 NaN

3 D -1.135632 -0.173215

4 D -1.135632 0.119209

In [53]: right_join = df1.merge(df2, on=["key"], how="right")

In [54]: right_join

Out[54]:

key value_x value_y

0 B -0.282863 1.212112

1 D -1.135632 -0.173215

2 D -1.135632 0.119209

3 E NaN -1.044236

In [55]: outer_join = df1.merge(df2, on=["key"], how="outer")

In [56]: outer_join

Out[56]:

key value_x value_y

0 A 0.469112 NaN

1 B -0.282863 1.212112

2 C -1.509059 NaN

3 D -1.135632 -0.173215

4 D -1.135632 0.119209

5 E NaN -1.044236

merge has a number of advantages over VLOOKUP:

The lookup value doesn’t need to be the first column of the lookup table

If multiple rows are matched, there will be one row for each match, instead of just the first

It will include all columns from the lookup table, instead of just a single specified column

It supports more complex join operations

Other considerations¶

Fill Handle¶

Create a series of numbers following a set pattern in a certain set of cells. In a spreadsheet, this would be done by shift+drag after entering the first number or by entering the first two or three values and then dragging.

This can be achieved by creating a series and assigning it to the desired cells.

In [57]: df = pd.DataFrame({"AAA": [1] * 8, "BBB": list(range(0, 8))})

In [58]: df

Out[58]:

AAA BBB

0 1 0

1 1 1

2 1 2

3 1 3

4 1 4

5 1 5

6 1 6

7 1 7

In [59]: series = list(range(1, 5))

In [60]: series

Out[60]: [1, 2, 3, 4]

In [61]: df.loc[2:5, "AAA"] = series

In [62]: df

Out[62]:

AAA BBB

0 1 0

1 1 1

2 1 2

3 2 3

4 3 4

5 4 5

6 1 6

7 1 7

Drop Duplicates¶

Excel has built-in functionality for removing duplicate values.

This is supported in pandas via drop_duplicates().

In [63]: df = pd.DataFrame(

....: {

....: "class": ["A", "A", "A", "B", "C", "D"],

....: "student_count": [42, 35, 42, 50, 47, 45],

....: "all_pass": ["Yes", "Yes", "Yes", "No", "No", "Yes"],

....: }

....: )

....:

In [64]: df.drop_duplicates()

Out[64]:

class student_count all_pass

0 A 42 Yes

1 A 35 Yes

3 B 50 No

4 C 47 No

5 D 45 Yes

In [65]: df.drop_duplicates(["class", "student_count"])

Out[65]:

class student_count all_pass

0 A 42 Yes

1 A 35 Yes

3 B 50 No

4 C 47 No

5 D 45 Yes

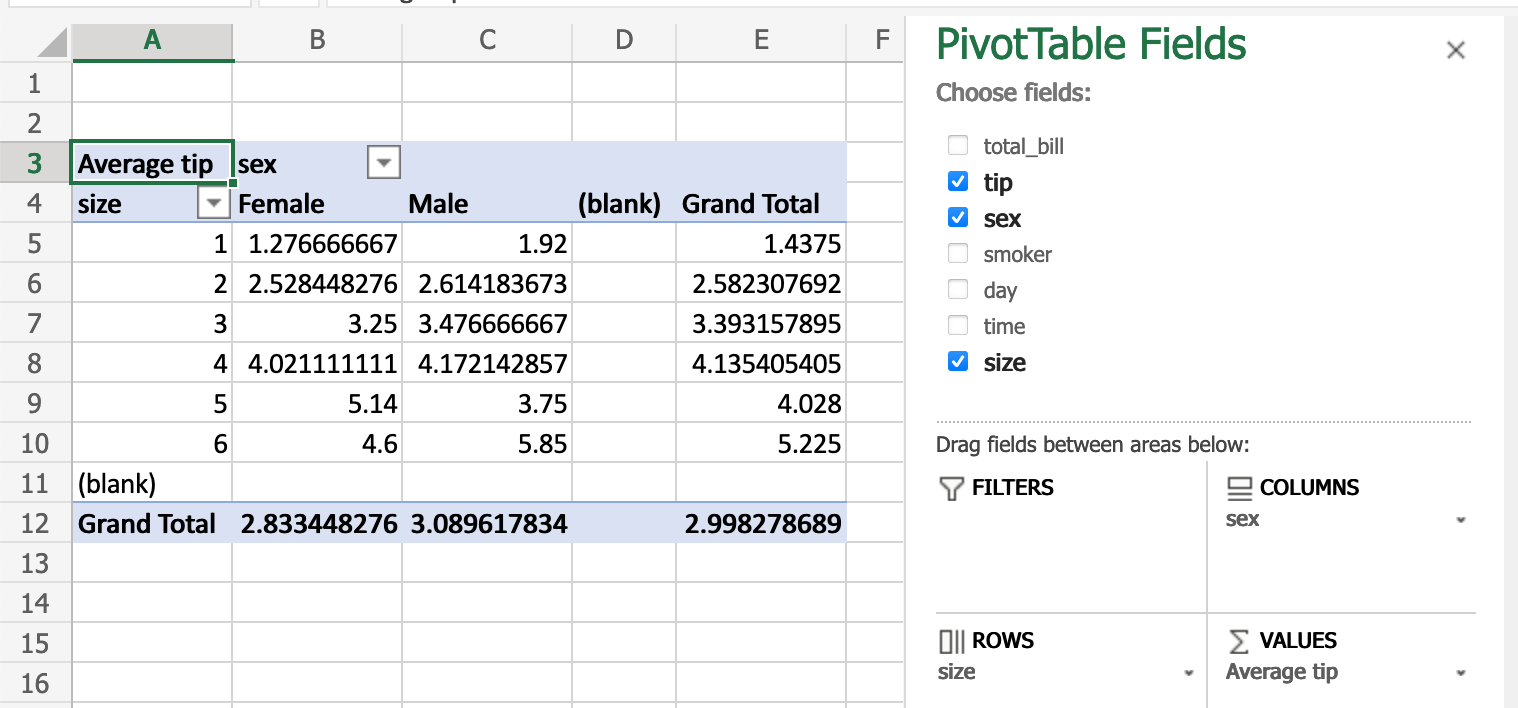

Pivot Tables¶

PivotTables

from spreadsheets can be replicated in pandas through Reshaping and pivot tables. Using the tips dataset again,

let’s find the average gratuity by size of the party and sex of the server.

In Excel, we use the following configuration for the PivotTable:

The equivalent in pandas:

In [66]: pd.pivot_table(

....: tips, values="tip", index=["size"], columns=["sex"], aggfunc=np.average

....: )

....:

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [66], line 2

1 pd.pivot_table(

----> 2 tips, values="tip", index=["size"], columns=["sex"], aggfunc=np.average

3 )

NameError: name 'tips' is not defined

Adding a row¶

Assuming we are using a RangeIndex (numbered 0, 1, etc.), we can use concat() to add a row to the bottom of a DataFrame.

In [67]: df

Out[67]:

class student_count all_pass

0 A 42 Yes

1 A 35 Yes

2 A 42 Yes

3 B 50 No

4 C 47 No

5 D 45 Yes

In [68]: new_row = pd.DataFrame([["E", 51, True]],

....: columns=["class", "student_count", "all_pass"])

....:

In [69]: pd.concat([df, new_row])

Out[69]:

class student_count all_pass

0 A 42 Yes

1 A 35 Yes

2 A 42 Yes

3 B 50 No

4 C 47 No

5 D 45 Yes

0 E 51 True

Find and Replace¶

Excel’s Find dialog

takes you to cells that match, one by one. In pandas, this operation is generally done for an

entire column or DataFrame at once through conditional expressions.

In [70]: tips

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [70], line 1

----> 1 tips

NameError: name 'tips' is not defined

In [71]: tips == "Sun"

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [71], line 1

----> 1 tips == "Sun"

NameError: name 'tips' is not defined

In [72]: tips["day"].str.contains("S")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [72], line 1

----> 1 tips["day"].str.contains("S")

NameError: name 'tips' is not defined

pandas’ replace() is comparable to Excel’s Replace All.

In [73]: tips.replace("Thu", "Thursday")

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

Cell In [73], line 1

----> 1 tips.replace("Thu", "Thursday")

NameError: name 'tips' is not defined