
Each autochanger that you have defined in an Autochanger resource in the Storage daemon's bacula-sd.conf file must have a corresponding Autochanger resource defined in the Director's bacula-dir.conf file. Normally you will already have a Storage resource that points to the Storage daemon's Autochanger resource, so you need only change the resource type of that Storage resource to Autochanger. In addition, the Autochanger = yes directive is not needed in the Director's Autochanger resource: since the resource type is Autochanger, the Director already knows that it represents an autochanger.

In addition to the above change (Storage to Autochanger), you must modify any additional Storage resources that correspond to devices that are part of the Autochanger device. Instead of the previous Autochanger = yes directive, they should now specify Autochanger = xxx, where xxx is the name of the Autochanger resource.

For example, in the bacula-dir.conf file:

Autochanger {                     # New resource
  Name = Changer-1
  Address = cibou.company.com
  SDPort = 9103
  Password = "xxxxxxxxxx"
  Device = LTO-Changer-1
  Media Type = LTO-4
  Maximum Concurrent Jobs = 50
}

Storage {
  Name = Changer-1-Drive0
  Address = cibou.company.com
  SDPort = 9103
  Password = "xxxxxxxxxx"
  Device = LTO4_1_Drive0
  Media Type = LTO-4
  Maximum Concurrent Jobs = 5
  Autochanger = Changer-1         # New directive
}

Storage {
  Name = Changer-1-Drive1
  Address = cibou.company.com
  SDPort = 9103
  Password = "xxxxxxxxxx"
  Device = LTO4_1_Drive1
  Media Type = LTO-4
  Maximum Concurrent Jobs = 5
  Autochanger = Changer-1         # New directive
}

...

Note that Storage resources Changer-1-Drive0 and Changer-1-Drive1 are not required since they make up part of an autochanger, and normally, Jobs refer only to the Autochanger resource. However, by referring to those Storage definitions in a Job, you will use only the indicated drive. This is not normally what you want to do, but it is very useful and often used for reserving a drive for restores. See the Storage daemon example .conf below and the use of AutoSelect = no.

So, in summary, the changes are:

Bacula also provides two new Job directives: Max Virtual Full Interval and Virtual Full Backup Pool.

The Max Virtual Full Interval directive behaves like the Max Full Interval directive, but for Virtual Full jobs. If Bacula sees that there has not been a Full backup within the Max Virtual Full Interval time, it will upgrade the job to a Virtual Full. If both Max Full Interval and Max Virtual Full Interval are set, Max Full Interval should take precedence.

The Virtual Full Backup Pool directive lets you change the pool used when the job is upgraded to a Virtual Full. You will probably want to use these two directives together, but that may depend on the specifics of your setup. If you set Max Virtual Full Interval without setting Virtual Full Backup Pool, Bacula will use whatever the "default" pool is set to, which is the same behavior as with Max Full Interval.
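The upgrade decision described above can be modelled as a small function. This is a simplified sketch, not Bacula's code. The function name `effective_level` and its inputs are hypothetical. It also assumes that a job with no prior Full is upgraded to Full, and that Max Full Interval is checked first so that it takes precedence when both directives are set.

```python
from datetime import datetime, timedelta
from typing import Optional


def effective_level(requested: str,
                    now: datetime,
                    last_full: Optional[datetime],
                    max_full_interval: Optional[timedelta] = None,
                    max_vfull_interval: Optional[timedelta] = None) -> str:
    """Hypothetical model of the level upgrade (not Bacula's implementation)."""
    if requested in ("Full", "VirtualFull"):
        return requested
    if last_full is None:
        # Assumption: with no prior Full there is nothing to consolidate,
        # so the job is upgraded to a real Full.
        return "Full"
    age = now - last_full
    # Max Full Interval is checked first, so it wins when both are set.
    if max_full_interval is not None and age >= max_full_interval:
        return "Full"
    if max_vfull_interval is not None and age >= max_vfull_interval:
        return "VirtualFull"
    return requested
```

For example, if the last Full is 30 days old, Max Virtual Full Interval is 7 days and Max Full Interval is 60 days, the sketch upgrades an Incremental to a Virtual Full. Lowering Max Full Interval to 14 days would make it a real Full instead.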

In Bacula version 9.0.0, we have added a new Directive named Backups To Keep that permits you to implement Progressive Virtual Fulls within Bacula. Sometimes this feature is known as Incremental Forever with Consolidation.

To implement the Progressive Virtual Full feature, simply add the Backups To Keep directive to your Virtual Full backup Job resource. The value specified on the directive indicates the number of backup jobs that should not be merged into the Virtual Full (i.e. the number of backup jobs that should remain after the Virtual Full has completed). The default is zero, which reverts to a standard Virtual Full that consolidates all the backup jobs that it finds.

Backups To Keep = 30

where the value (30 in the above example) is the number of backups to retain. When this directive is present during a Virtual Full (it is ignored for other Job types), Bacula will look for the most recent Full backup that has more subsequent backups than the value specified. In the above example, the Job will simply terminate unless there is a Full backup followed by at least 31 backups of either level Differential or Incremental.

Assuming that the last Full backup is followed by 32 Incremental backups, a Virtual Full will be run that consolidates the Full with the first two Incrementals that were run after the Full. The result is that you will end up with a Full followed by 30 Incremental backups. The Job Resource in bacula-dir.conf to accomplish this would be:

Job {
  Name = "VFull"
  Type = Backup
  Level = VirtualFull
  Client = "my-fd"
  File Set = "FullSet"
  Accurate = Yes
  Backups To Keep = 30
}
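The selection rule in the worked example (32 Incrementals, Backups To Keep = 30, merge the Full with the two oldest) can be sketched as follows. The sketch assumes a hypothetical input: a list of the JobIds that ran after the most recent Full, oldest first. It only considers that single Full, as the example does, and does not search for an earlier one.

```python
from typing import List, Optional


def plan_progressive_vfull(jobs_after_full: List[int],
                           backups_to_keep: int) -> Optional[List[int]]:
    """Return the JobIds to merge with the Full, or None if the Job terminates.

    jobs_after_full: hypothetical list of Incremental/Differential JobIds run
    after the most recent Full, ordered oldest first.
    """
    if backups_to_keep <= 0:
        # Default (0): a standard Virtual Full consolidates every job it finds.
        return list(jobs_after_full)
    surplus = len(jobs_after_full) - backups_to_keep
    if surplus <= 0:
        # Not more than Backups To Keep jobs after the Full: nothing to do.
        return None
    # Merge the oldest jobs so that exactly backups_to_keep jobs remain.
    return jobs_after_full[:surplus]
```

With 32 jobs and a value of 30, the first two JobIds are merged and 30 remain. With exactly 30 jobs, the function returns None, matching "the Job will simply terminate".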

Note, however, that Virtual Full jobs are not compatible with any plugins that you may be using.

Device {
  Name = ...
  Archive Device = /dev/nst0
  Alert Command = "/opt/bacula/scripts/tapealert %l"
  Control Device = /dev/sg1       # must be SCSI ctl for /dev/nst0
  ...
}

In addition, the Control Device directive in the Storage Daemon's conf file must be specified in each Device resource to permit Bacula to detect tape alerts on a specific device (normally only tape devices).

Once the above mentioned two directives (Alert Command and Control Device) are in place in each of your Device resources, Bacula will check for tape alerts at two points:

At each of the above times, Bacula will call the new tapealert script, which uses the tapeinfo program. The tapeinfo utility is part of the apt sg3-utils and rpm sg3_utils packages that must be installed on your systems. Then after each alert that Bacula finds for that drive, Bacula will emit a Job message that is either INFO, WARNING, or FATAL depending on the designation in the Tape Alert published by the T10 Technical Committee on SCSI Storage Interfaces (www.t10.org). For the specification, please see: www.t10.org/ftp/t10/document.02/02-142r0.pdf

As a somewhat extreme example, if tape alerts 3, 5, and 39 are set, you will get the following output in your backup job.

17-Nov 13:37 rufus-sd JobId 1: Error: block.c:287
  Write error at 0:17 on device "tape"
  (/home/kern/bacula/k/regress/working/ach/drive0)
  Vol=TestVolume001. ERR=Input/output error.
17-Nov 13:37 rufus-sd JobId 1: Fatal error: Alert:
  Volume="TestVolume001" alert=3: ERR=The operation has stopped because
  an error has occurred while reading or writing data which the drive
  cannot correct. The drive had a hard read or write error
17-Nov 13:37 rufus-sd JobId 1: Fatal error: Alert:
  Volume="TestVolume001" alert=5: ERR=The tape is damaged or the drive
  is faulty. Call the tape drive supplier helpline. The drive can no
  longer read data from the tape
17-Nov 13:37 rufus-sd JobId 1: Warning: Disabled Device "tape"
  (/home/kern/bacula/k/regress/working/ach/drive0) due to tape alert=39.
17-Nov 13:37 rufus-sd JobId 1: Warning: Alert: Volume="TestVolume001"
  alert=39: ERR=The tape drive may have a fault. Check for availability
  of diagnostic information and run extended diagnostics if applicable.
  The drive may have had a failure which may be identified by stored
  diagnostic information or by running extended diagnostics (eg Send
  Diagnostic). Check the tape drive users manual for instructions on
  running extended diagnostic tests and retrieving diagnostic data.

Without the tape alert feature enabled, you would only get the first error message above, which is the error Bacula received from the drive. Notice also that, in the above output, alerts 3 and 5 are critical errors that cause the Job to fail, and alert 39 causes the tape drive to be disabled.

If you attempt to run another Job using the Device that has been disabled, you will get a message similar to the following:

17-Nov 15:08 rufus-sd JobId 2: Warning:
  Device "tape" requested by DIR is disabled.

and the Job may be failed if no other drive can be found.

Once the problem with the tape drive has been corrected, you can clear the tape alerts and re-enable the device with a bconsole command such as the following:

enable Storage=Tape

Note, when you enable the device, the list of prior tape alerts for that drive will be discarded.

Since it is possible to miss tape alerts, Bacula maintains a temporary list of the last 8 alerts, and each time Bacula calls the tapealert script, it will keep up to 10 alert status codes. Normally there will only be one or two alert errors for each call to the tapealert script.
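This bounded alert history can be pictured as a fixed-size ring buffer. The sketch below is hypothetical: the class, its method names, and the choice to ignore calls that report no alerts are illustrative assumptions. It keeps the last 8 entries, truncates each entry to 10 status codes, and is cleared when the device is re-enabled.

```python
from collections import deque
from datetime import datetime
from typing import List, Optional, Tuple

MAX_ALERT_ENTRIES = 8     # "the last 8 alerts"
MAX_CODES_PER_CALL = 10   # "up to 10 alert status codes" per tapealert call


class DriveAlertHistory:
    """Hypothetical per-drive alert store; the oldest entries drop off."""

    def __init__(self) -> None:
        self._entries = deque(maxlen=MAX_ALERT_ENTRIES)

    def record(self, volume: str, codes: List[int],
               when: Optional[datetime] = None) -> None:
        if not codes:
            return  # assumption: calls that report nothing are not stored
        self._entries.append(
            (when or datetime.now(), volume, tuple(codes[:MAX_CODES_PER_CALL])))

    def clear(self) -> None:
        # Mirrors enabling the device, which discards the prior alerts.
        self._entries.clear()

    def entries(self) -> List[Tuple[datetime, str, Tuple[int, ...]]]:
        return list(self._entries)
```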

Once a drive has one or more tape alerts, you can see them by using the bconsole status command as follows:

status storage=Tape

which produces the following output:

Device Vtape is "tape" (/home/kern/bacula/k/regress/working/ach/drive0)
mounted with:
    Volume: TestVolume001
    Pool: Default
    Media type: tape
    Device is disabled. User command.
    Total Bytes Read=0 Blocks Read=1 Bytes/block=0
    Positioned at File=1 Block=0
    Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001"
       alert=Hard Error
    Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001"
       alert=Read Failure
    Warning Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001"
       alert=Diagnostics Required

If you want to see the long message associated with each of the alerts, simply set the debug level to 10 or more and re-issue the status command:

setdebug storage=Tape level=10
status storage=Tape

...
Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001"
   flags=0x0 alert=The operation has stopped because an error has occurred
   while reading or writing data which the drive cannot correct. The drive had
   a hard read or write error
Critical Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001"
   flags=0x0 alert=The tape is damaged or the drive is faulty. Call the tape
   drive supplier helpline. The drive can no longer read data from the tape
Warning Alert: at 17-Nov-2016 15:08:01 Volume="TestVolume001" flags=0x1
   alert=The tape drive may have a fault. Check for availability of diagnostic
   information and run extended diagnostics if applicable. The drive may
   have had a failure which may be identified by stored diagnostic information
   or by running extended diagnostics (eg Send Diagnostic). Check the tape
   drive users manual for instructions on running extended diagnostic tests
   and retrieving diagnostic data.
...

The next time you enable the Device, either by using bconsole or by restarting the Storage Daemon, all the saved alert messages will be discarded.

Tape Alerts numbered 14, 20, 29, 30, 31, 38, and 39 will cause Bacula to disable the drive.

Please note that certain tape alerts, such as 14, have multiple effects (disabling the Volume and disabling the drive).
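The rule above can be expressed as a simple lookup. In this sketch, the drive-disabling set is taken from the list in the text. The Volume-disabling set contains only alert 14, because that is the only one the text names, so it is an incomplete, illustrative assumption. The function name and effect labels are hypothetical.

```python
from typing import Iterable, Set

# Alerts that disable the drive, as listed in the text above.
DRIVE_DISABLING_ALERTS = frozenset({14, 20, 29, 30, 31, 38, 39})
# Alerts that also disable the Volume. Only 14 is named in the text, so this
# set is an illustrative assumption rather than a complete list.
VOLUME_DISABLING_ALERTS = frozenset({14})


def alert_effects(alerts: Iterable[int]) -> Set[str]:
    """Return the hypothetical actions triggered by a set of alert numbers."""
    effects = set()
    for code in alerts:
        if code in DRIVE_DISABLING_ALERTS:
            effects.add("disable_drive")
        if code in VOLUME_DISABLING_ALERTS:
            effects.add("disable_volume")
    return effects
```

Applied to the earlier example (alerts 3, 5 and 39), only alert 39 disables the drive, which is consistent with the "Disabled Device ... due to tape alert=39" message.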

This directive is used to specify a list of directories that can be accessed by a restore session. Without this directive, a restricted console cannot restore any file. Multiple directory names may be specified by separating them with commas, and/or by specifying multiple DirectoryACL directives. For example, the directive may be specified as:

DirectoryACL = /home/bacula/, "/etc/", "/home/test/*"

With the above specification, the console can access the following directories:

But not to the following files or directories:

If a directory starts with a Windows pattern (e.g. c:/), Bacula will automatically ignore case when checking directory names.

The bconsole list commands can now be used safely from a restricted bconsole session. The information displayed will respect the ACL configured for the Console session. For example, if a restricted Console has access to JobA, JobB and JobC, information about JobD will not appear in the list jobs command.

# cat /opt/bacula/etc/bacula-dir.conf
...
Console {
  Name = fd-cons                      # Name of the FD Console
  Password = yyy
  ...
  ClientACL = localhost-fd            # everything allowed
  RestoreClientACL = test-fd          # restore only
  BackupClientACL = production-fd     # backup only
}

The ClientACL directive takes precedence over the RestoreClientACL and the BackupClientACL. In the Console resource above, this means that the bconsole linked to the Console named "fd-cons" will be able to run:

At restore time, jobs for clients “localhost-fd”, “test-fd” and “production-fd” will be available.

If *all* is set for ClientACL, backup and restore will be allowed for all clients, regardless of any RestoreClientACL or BackupClientACL settings.
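The precedence rules for these three directives can be sketched as a small permission check. The function name and the set-of-strings return value are hypothetical. The logic follows the text: a ClientACL match, or *all*, grants both operations. Otherwise, RestoreClientACL and BackupClientACL each grant one operation.

```python
from typing import Iterable, Set

ALL = "*all*"


def _listed(client: str, acl: Iterable[str]) -> bool:
    acl = set(acl)
    return ALL in acl or client in acl


def client_permissions(client: str,
                       client_acl: Iterable[str] = (),
                       restore_acl: Iterable[str] = (),
                       backup_acl: Iterable[str] = ()) -> Set[str]:
    """Hypothetical model of ClientACL / RestoreClientACL / BackupClientACL."""
    # ClientACL takes precedence: a match there allows both operations.
    if _listed(client, client_acl):
        return {"backup", "restore"}
    perms = set()
    if _listed(client, restore_acl):
        perms.add("restore")
    if _listed(client, backup_acl):
        perms.add("backup")
    return perms
```

With the "fd-cons" example, localhost-fd gets both operations, test-fd gets restore only, and production-fd gets backup only.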

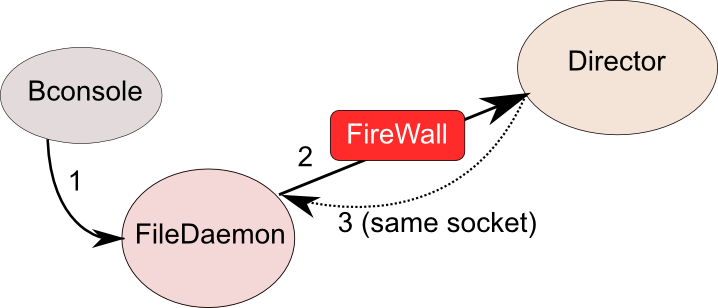

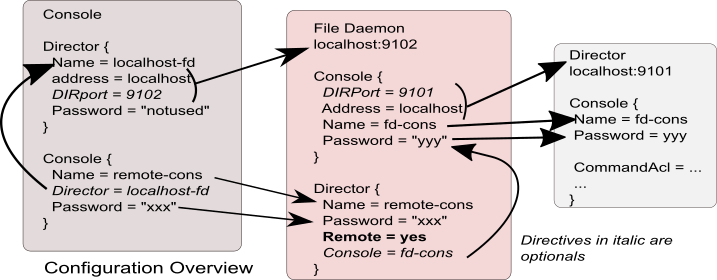

The flow of information is shown in the picture below:

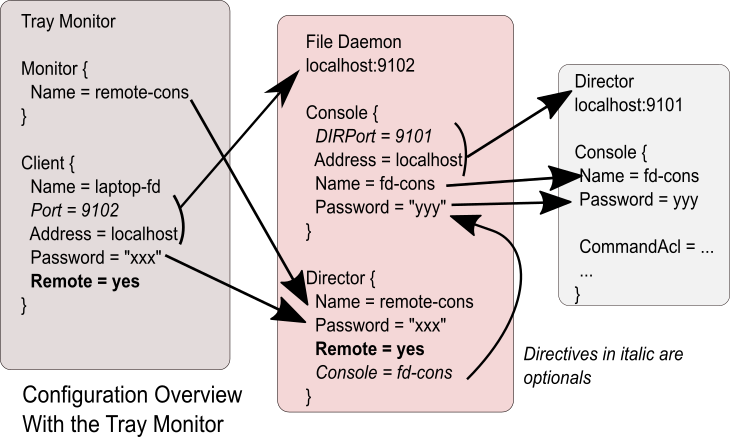

In order to ensure security, a number of new directives must be enabled in the new tray monitor, in the File Daemon, and in the Director. A typical configuration might look like the following:

# cat /opt/bacula/etc/bacula-dir.conf
...
Console {
  Name = fd-cons                # Name of the FD Console
  Password = yyy

  # These commands are used by the tray monitor; it is possible to restrict them
  CommandACL = run, restore, wait, .status, .jobs, .clients
  CommandACL = .storages, .pools, .filesets, .defaults, .estimate

  # Adapt for your needs
  jobacl = *all*
  poolacl = *all*
  clientacl = *all*
  storageacl = *all*
  catalogacl = *all*
  filesetacl = *all*
}

# cat /opt/bacula/etc/bacula-fd.conf
...
Console {                       # Console used to connect to the Director
  Name = fd-cons
  DIRPort = 9101
  address = localhost
  Password = "yyy"
}

Director {
  Name = remote-cons            # Name of the tray monitor/bconsole
  Password = "xxx"              # Password of the tray monitor/bconsole
  Remote = yes                  # Allow sending commands to the Console defined above
}

cat /opt/bacula/etc/bconsole-remote.conf
...
Director {
  Name = localhost-fd
  address = localhost           # Specify the FD address
  DIRport = 9102                # Specify the FD Port
  Password = "notused"
}

Console {
  Name = remote-cons            # Name used in the auth process
  Password = "xxx"
}

cat ~/.bacula-tray-monitor.conf
Monitor {
  Name = remote-cons
}

Client {
  Name = localhost-fd
  address = localhost           # Specify the FD address
  Port = 9102                   # Specify the FD Port
  Password = "xxx"
  Remote = yes
}

A more detailed description with complete examples is available in a separate chapter of this manual.


A new tray monitor has been added to the 9.0 release. It offers the following features:

The Tray Monitor can periodically scan a specific directory (the “Command Directory”) and process “*.bcmd” files to find jobs to run.

The format of the “file.bcmd” command file is the following:

<component name>:<run command>
<component name>:<run command>
...

<component name> = string
<run command> = string (bconsole command line)

For example:

localhost-fd: run job=backup-localhost-fd level=full
localhost-dir: run job=BackupCatalog

The command file should contain at least one command. The component specified in the first part of the command line should be defined in the tray monitor. Once the command file is detected by the tray monitor, a popup is displayed to the user and it is possible for the user to cancel the job directly.
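The following sketch shows how a tool might validate a “.bcmd” file before dropping it into the Command Directory. It checks the same constraints the tray monitor applies: at least one command, and each component defined in the tray monitor. The function name is hypothetical. Splitting each line at its first colon is also an assumption: it lets the run command itself contain colons.

```python
from typing import List, Set, Tuple


def parse_bcmd(text: str, known_components: Set[str]) -> List[Tuple[str, str]]:
    """Hypothetical validator for '<component name>:<run command>' lines."""
    commands = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        component, sep, command = line.partition(":")
        component, command = component.strip(), command.strip()
        if not sep or not component or not command:
            raise ValueError(f"line {lineno}: expected '<component name>:<run command>'")
        if component not in known_components:
            raise ValueError(f"line {lineno}: {component!r} is not defined in the tray monitor")
        commands.append((component, command))
    if not commands:
        raise ValueError("a command file must contain at least one command")
    return commands
```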

The file can be created with tools such as “cron” or the “task scheduler” on Windows. It is possible to verify the network connection at that time to avoid network errors.

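#!/bin/sh
# Only create the command file if the Director host answers a ping.
# (">/dev/null 2>&1" is portable; "&>" is a bash-only redirection.)
if ping -c 1 director > /dev/null 2>&1
then
    echo "my-dir: run job=backup" > /path/to/commands/backup.bcmd
fi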

Since Bacula version 8.4.1, it has been possible to configure a Verify Job with level=Data that rereads all records from a job and optionally checks the size and the checksum of all files. Starting with Bacula version 9.0, you can also use the accurate option to check catalog records at the same time. A Verify job with level=Data and accurate=yes can replace the level=VolumeToCatalog option.

For more information on how to set up a Verify Data job, see the Verify Volume Data section of this manual.

To run a Verify Job with the accurate option, you can either set the option in the Job definition or use accurate=yes on the command line.

* run job=VerifyData jobid=10 accurate=yes

It is now possible to send the list of all saved files to a Messages resource with the saved message type. Sending this flow of information to the Director and/or the catalog is not recommended when the client FileSet is large. To avoid side effects, the all keyword does not include the saved message type, so the saved message type must be set explicitly.

# cat /opt/bacula/etc/bacula-fd.conf
...
Messages {
  Name = Standard
  director = mydirector-dir = all, !terminate, !restored, !saved
  append = /opt/bacula/working/bacula-fd.log = all, saved, restored
}
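The effect of the two destinations above can be illustrated by resolving each type list into a set. This is a sketch only. KNOWN_TYPES is an illustrative subset of Bacula message types, not the full list. Left-to-right evaluation of the "!" exclusions is also an assumption. The one rule taken from the text is that all never includes saved.

```python
# Illustrative subset of Bacula message types (not the complete list).
KNOWN_TYPES = {
    "info", "warning", "error", "fatal", "terminate", "saved", "notsaved",
    "skipped", "mount", "restored", "security", "alert", "volmgmt",
}


def resolve_types(spec: str) -> set:
    """Hypothetical resolver for a 'type, type, !type' list, read left to right."""
    selected = set()
    for token in (t.strip().lower() for t in spec.split(",")):
        if not token:
            continue
        if token.startswith("!"):
            selected.discard(token[1:])          # explicit exclusion
        elif token == "all":
            selected |= KNOWN_TYPES - {"saved"}  # 'all' never includes 'saved'
        else:
            selected.add(token)
    return selected
```

Under this model, the director destination receives neither saved nor restored messages, while the log file receives both.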

The new .estimate command can be used to get statistics about a job to run. The command uses the database to approximate the size and the number of files of the next job. On a PostgreSQL database, the command uses regression slope to compute values. On MySQL, where these statistical functions are not available, the command uses a simple “average” estimation. The correlation number is given for each value.

*.estimate job=backup level=I
nbjob=0 corrbytes=0 jobbytes=0 corrfiles=0 jobfiles=0 duration=0 job=backup
*.estimate job=backup level=F
level=F nbjob=1 corrbytes=0 jobbytes=210937774 corrfiles=0 jobfiles=2545 duration=0 job=backup
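The two estimation strategies can be sketched as follows. The regression path uses the same least-squares quantities that PostgreSQL's regr_slope(Y, X), regr_intercept(Y, X) and corr(Y, X) aggregates compute. The average path stands in for the MySQL fallback. The text does not say which catalog columns are used, so treating X as a job time and Y as JobBytes or JobFiles is an assumption. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def regression_estimate(xs: Sequence[float], ys: Sequence[float],
                        x_next: float) -> Tuple[float, float]:
    """Least-squares fit of ys on xs; returns (prediction at x_next, correlation)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx                    # same quantity as regr_slope(y, x)
    intercept = mean_y - slope * mean_x  # same quantity as regr_intercept(y, x)
    # Pearson correlation; undefined when y is constant, reported as 0 here.
    corr = sxy / math.sqrt(sxx * syy) if syy > 0 else 0.0
    return slope * x_next + intercept, corr


def average_estimate(ys: Sequence[float]) -> float:
    """Fallback when no regression functions are available: the plain mean."""
    if not ys:
        raise ValueError("no previous jobs")
    return sum(ys) / len(ys)
```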

After the reception of a signal, traceback and lockdump information are now stored in the same file.

The list jobs bconsole command now accepts new command line options:

The “@tall” command allows logging all input/output from a console session.

*@tall /tmp/log
*st dir
...


Job {
  Name = Migrate-Job
  Type = Migrate
  ...
  RunAfter = "echo New JobId is %I"
}

In Bacula version 9.0 and later, we introduced a new .api version to help external tools to parse various Bacula bconsole output.

The api_opts option can use the following arguments:

| Option | Description |
|---|---|
| C | Clear current options |
| tn | Use a specific time format (1 ISO format, 2 Unix Timestamp, 3 Default Bacula time format) |
| sn | Use a specific separator between items (new line by default). |
| Sn | Use a specific separator between objects (new line by default). |
| o | Convert all keywords to lowercase and convert all non isalpha characters to _ |

.api 2 api_opts=t1s43S35
.status dir running
==================================
jobid=10 job=AJob ...
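An external tool that builds or interprets api_opts strings might decode them as in the sketch below. The function names are hypothetical. Reading the number after s and S as a decimal ASCII code is an assumption inferred from the example s43S35, where 43 is "+" and 35 is "#". The 1 to 3 mapping for t comes from the table above.

```python
# Time formats from the table; the number after s/S is assumed to be an ASCII code.
TIME_FORMATS = {1: "iso", 2: "unix", 3: "bacula"}


def parse_api_opts(opts: str) -> dict:
    """Parse an api_opts string such as 't1s43S35' into a settings dict."""
    settings = {}
    i = 0
    while i < len(opts):
        flag = opts[i]
        i += 1
        if flag == "C":
            settings.clear()              # C: clear current options
        elif flag == "o":
            settings["lowercase_keys"] = True
        elif flag in ("t", "s", "S"):
            start = i                     # read the decimal number after the flag
            while i < len(opts) and opts[i].isdigit():
                i += 1
            if start == i:
                raise ValueError(f"option {flag!r} needs a number")
            n = int(opts[start:i])
            if flag == "t":
                if n not in TIME_FORMATS:
                    raise ValueError(f"unknown time format {n}")
                settings["time_format"] = TIME_FORMATS[n]
            elif flag == "s":
                settings["item_sep"] = chr(n)    # separator between items
            else:
                settings["object_sep"] = chr(n)  # separator between objects
        else:
            raise ValueError(f"unknown option {flag!r}")
    return settings


def normalize_key(key: str) -> str:
    """Mimic the 'o' option: lowercase letters, map every non-letter to '_'."""
    return "".join(c.lower() if c.isalpha() else "_" for c in key)
```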

In Bacula version 9.0 and later, we introduced a new options parameter for the setdebug bconsole command.

The following arguments to the new option parameter are available to control debug functions.

| Option | Description |
|---|---|
| 0 | Clear debug flags |
| i | Turn off, ignore bwrite() errors on restore on File Daemon |
| d | Turn off decomp of BackupRead() streams on File Daemon |
| t | Turn on timestamps in traces |
| T | Turn off timestamps in traces |
| c | Truncate trace file if trace file is activated |
| l | Turn on recording events on P() and V() |
| p | Turn on the display of the event ring when doing a backtrace |

The following command will enable debugging for the File Daemon, truncate an existing trace file, and turn on timestamps when writing to the trace file.

* setdebug level=10 trace=1 options=ct fd

It is now possible to use a class of debug messages called tags to control the debug output of Bacula daemons.

| Tag | Description |
|---|---|
| all | Display all debug messages |
| bvfs | Display BVFS debug messages |
| sql | Display SQL related debug messages |
| memory | Display memory and poolmem allocation messages |
| scheduler | Display scheduler related debug messages |

* setdebug level=10 tags=bvfs,sql,memory
* setdebug level=10 tags=!bvfs
# bacula-dir -t -d 200,bvfs,sql

The tags option is composed of a list of tags. Tags are separated by “,” or “+” or “-” or “!”. To disable a specific tag, use “-” or “!” in front of the tag. Note that more tags are planned for future versions.
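The separator rules just described can be sketched as a small tokenizer. The function name and its (enabled, disabled) return shape are hypothetical. The rules themselves come from the text: tags are separated by “,”, “+”, “-” or “!”, and a “-” or “!” in front of a tag disables it. The sketch covers only the tags= value, not the leading debug level accepted by -d.

```python
import re

# A tag is an optional separator followed by a run of non-separator characters.
_TAG_RE = re.compile(r"([,+!-]?)([^,+!-]+)")


def parse_tags(spec: str):
    """Split a tags string into (enabled, disabled) sets of tag names."""
    enabled, disabled = set(), set()
    for sep, tag in _TAG_RE.findall(spec):
        tag = tag.strip().lower()
        if sep in ("-", "!"):
            disabled.add(tag)      # "-" or "!" in front of a tag disables it
            enabled.discard(tag)
        else:
            enabled.add(tag)       # "," or "+" (or nothing) enables it
            disabled.discard(tag)
    return enabled, disabled
```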

Comm Compression = no

This directive can appear in the following resources:

| Configuration file | Resource |
|---|---|
| bacula-dir.conf | Director resource |
| bacula-fd.conf | Client (or FileDaemon) resource |
| bacula-sd.conf | Storage resource |
| bconsole.conf | Console resource |
| bat.conf | Console resource |

In many cases, the volume of data transmitted across the communications line can be reduced by a factor of three when this directive is enabled (the default). If compression is not effective, Bacula turns it off on a record-by-record basis.

If you are backing up data that is already compressed the comm line compression will not be effective, and you are likely to end up with an average compression ratio that is very small. In this case, Bacula reports None in the Job report.
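The record-by-record fallback can be illustrated with the following sketch. zlib is used purely as a stand-in, because the text does not say which algorithm Bacula uses on the communications line. The function names are hypothetical. Each record is compressed, and if the result is not smaller, the record is sent raw with a flag so the receiver knows not to decompress it.

```python
import zlib
from typing import Tuple


def encode_record(payload: bytes, level: int = 1) -> Tuple[bool, bytes]:
    """Compress one record, but fall back to raw bytes if that does not help."""
    compressed = zlib.compress(payload, level)  # zlib is a stand-in algorithm
    if len(compressed) < len(payload):
        return True, compressed
    return False, payload  # compression turned off for this record only


def decode_record(is_compressed: bool, data: bytes) -> bytes:
    """Reverse encode_record using the per-record flag."""
    return zlib.decompress(data) if is_compressed else data
```

Already-compressed or random-looking data takes the raw path, which is why the overall ratio for such backups ends up close to none.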

This feature is available if you have Bacula Community produced binaries and the Aligned Volumes plugin.

/opt/bacula/scripts/baculabackupreport 24

I have put the above line in my scripts/delete_catalog_backup script so that it will be mailed to me nightly.

The message identifier will be unique for each message, and once assigned to a message it will not change even if the text of the message changes. This means that the message identifier will be the same no matter what language the text is displayed in. More importantly, it will allow us to produce a listing of the messages with, in some cases, additional explanations or instructions on how to correct the problem. All this will take several years, since it is a lot of work and requires some new programs, not yet written, to manage these message identifiers.

The format of the message identifier is:

[AAnnnn]

where A is an uppercase character and nnnn is a four-digit number. The first character indicates the software component (daemon), the second letter indicates the severity, and the number is unique for a given component and severity.

For example:

[SF0001]

The first character, representing the component, is currently one of the following:

| Letter | Component |
|---|---|
| S | Storage daemon |
| D | Director |
| F | File daemon |

The second character representing the severity or level can be:

| Letter | Severity |
|---|---|
| A | Abort |
| F | Fatal |
| E | Error |
| W | Warning |
| S | Security |
| I | Info |
| D | Debug |
| O | OK (i.e. operation completed normally) |

So, in the example above, [SF0001] is recognizable as a message id because it is enclosed in brackets and appears at the beginning of the message, and it indicates a fatal error generated by the Storage daemon.
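A log-processing script could recognize these identifiers with a regular expression, as sketched below. The function name is hypothetical, and the letter tables are copied from the lists above. Unknown letters are rejected here, although more component and severity letters may be added over time.

```python
import re
from typing import Optional, Tuple

COMPONENTS = {"S": "Storage daemon", "D": "Director", "F": "File daemon"}
SEVERITIES = {"A": "Abort", "F": "Fatal", "E": "Error", "W": "Warning",
              "S": "Security", "I": "Info", "D": "Debug", "O": "OK"}

# "[AAnnnn]" at the very start of the message.
MSG_ID_RE = re.compile(r"^\[([A-Z])([A-Z])(\d{4})\]")


def parse_message_id(message: str) -> Optional[Tuple[str, str, int]]:
    """Return (component, severity, number) or None if there is no valid id."""
    m = MSG_ID_RE.match(message)
    if not m:
        return None  # ids are only recognized at the beginning of a message
    comp, sev, num = m.groups()
    if comp not in COMPONENTS or sev not in SEVERITIES:
        return None  # letters not defined (yet) in the tables above
    return COMPONENTS[comp], SEVERITIES[sev], int(num)
```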

As mentioned above it will take some time to implement these message ids everywhere, and over time we may add more component letters and more severity levels as needed.