Table of Contents

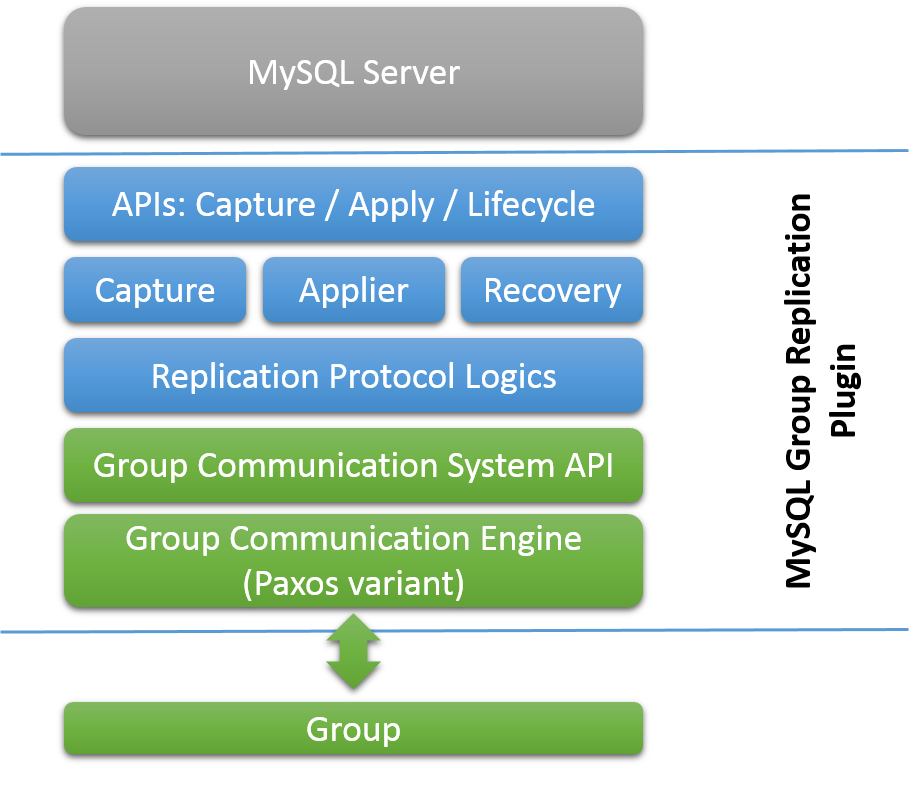

This chapter explains MySQL Group Replication and how to install, configure and monitor groups. MySQL Group Replication is a MySQL Server plugin that enables you to create elastic, highly-available, fault-tolerant replication topologies.

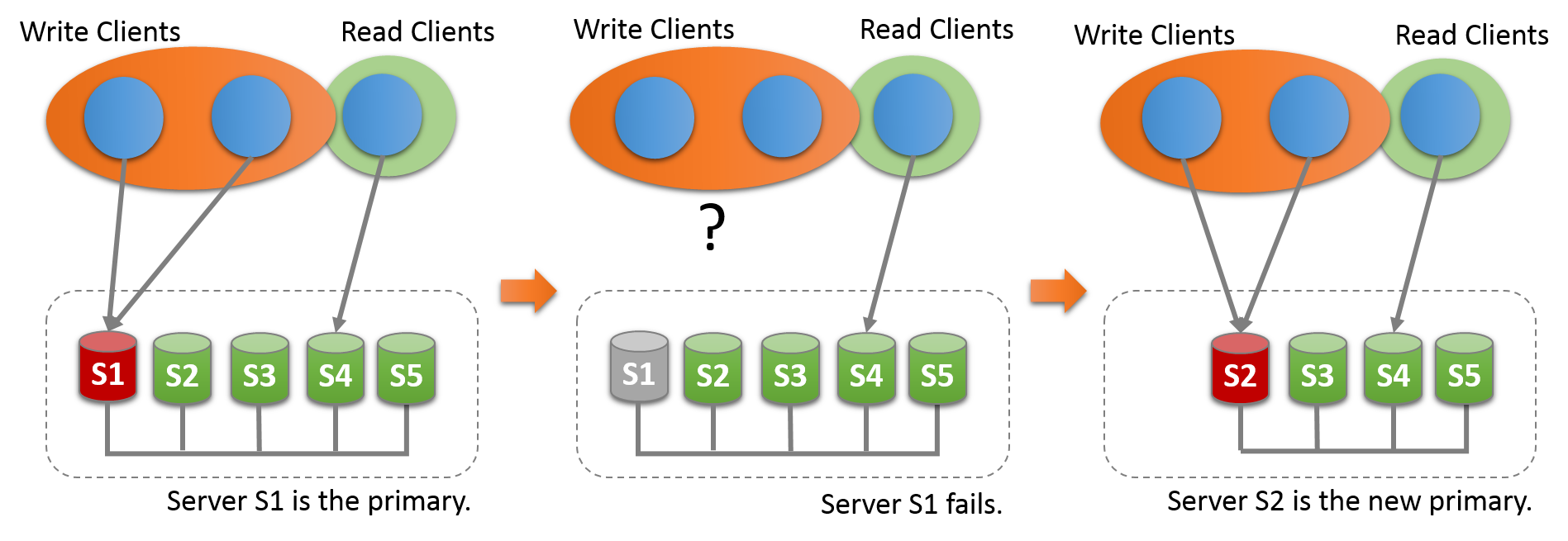

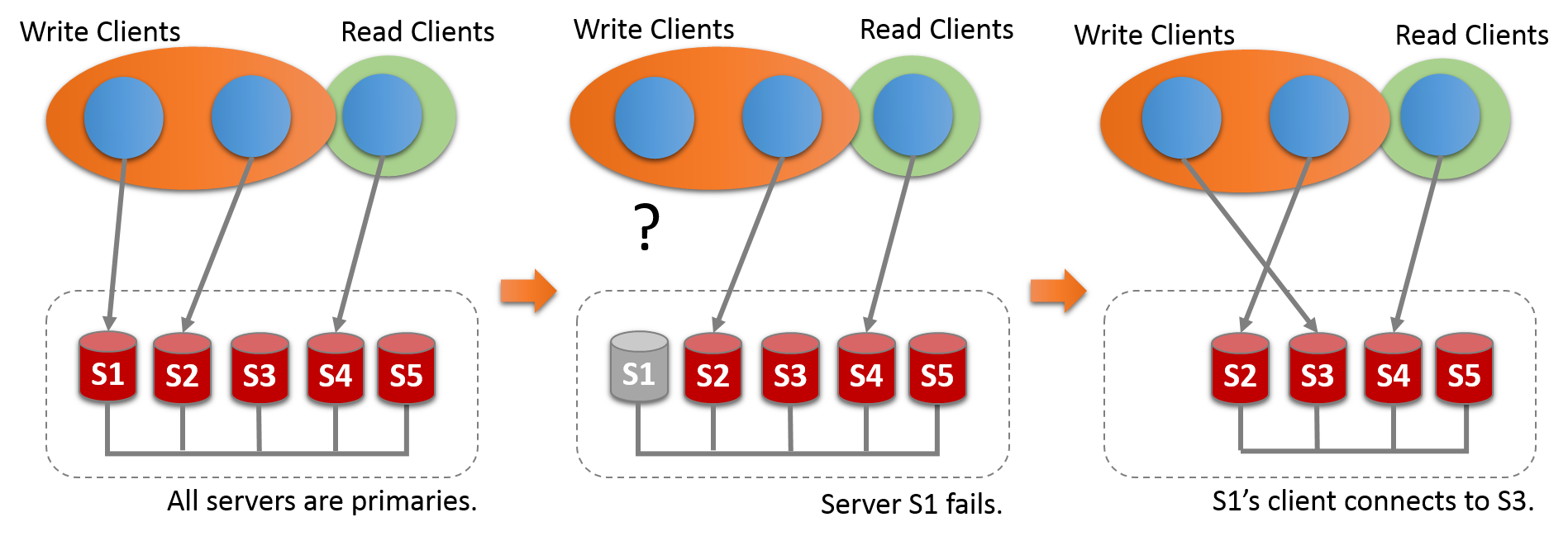

Groups can operate in a single-primary mode with automatic primary election, where only one server accepts updates at a time. Alternatively, for more advanced users, groups can be deployed in multi-primary mode, where all servers can accept updates, even if they are issued concurrently.

There is a built-in group membership service that keeps the view of the group consistent and available for all servers at any given point in time. Servers can leave and join the group and the view is updated accordingly. Sometimes servers can leave the group unexpectedly, in which case the failure detection mechanism detects this and notifies the group that the view has changed. This is all automatic.

The chapter is structured as follows:

Section 21.1, “Group Replication Background” provides an introduction to groups and how Group Replication works.

Section 21.2, “Getting Started” explains how to configure multiple MySQL Server instances to create a group.

Section 21.3, “Monitoring Group Replication” explains how to monitor a group.

Section 21.5, “Group Replication Security” explains how to secure a group.

Section 21.9, “Group Replication Technical Details” provides in-depth information about how Group Replication works.

This section provides background information on MySQL Group Replication.

The most common way to create a fault-tolerant system is to resort to making components redundant, in other words the component can be removed and the system should continue to operate as expected. This creates a set of challenges that raise complexity of such systems to a whole different level. Specifically, replicated databases have to deal with the fact that they require maintenance and administration of several servers instead of just one. Moreover, as servers are cooperating together to create the group several other classic distributed systems problems have to be dealt with, such as network partitioning or split brain scenarios.

Therefore, the ultimate challenge is to fuse the logic of the database and data replication with the logic of having several servers coordinated in a consistent and simple way. In other words, to have multiple servers agreeing on the state of the system and the data on each and every change that the system goes through. This can be summarized as having servers reaching agreement on each database state transition, so that they all progress as one single database or alternatively that they eventually converge to the same state. Meaning that they need to operate as a (distributed) state machine.

MySQL Group Replication provides distributed state machine replication with strong coordination between servers. Servers coordinate themselves automatically when they are part of the same group. The group can operate in a single-primary mode with automatic primary election, where only one server accepts updates at a time. Alternatively, for more advanced users the group can be deployed in multi-primary mode, where all servers can accept updates, even if they are issued concurrently. This power comes at the expense of applications having to work around the limitations imposed by such deployments.

There is a built-in group membership service that keeps the view of the group consistent and available for all servers at any given point in time. Servers can leave and join the group and the view is updated accordingly. Sometimes servers can leave the group unexpectedly, in which case the failure detection mechanism detects this and notifies the group that the view has changed. This is all automatic.

For a transaction to commit, the majority of the group have to agree on the order of a given transaction in the global sequence of transactions. Deciding to commit or abort a transaction is done by each server individually, but all servers make the same decision. If there is a network partition, resulting in a split where members are unable to reach agreement, then the system does not progress until this issue is resolved. Hence there is also a built-in, automatic, split-brain protection mechanism.

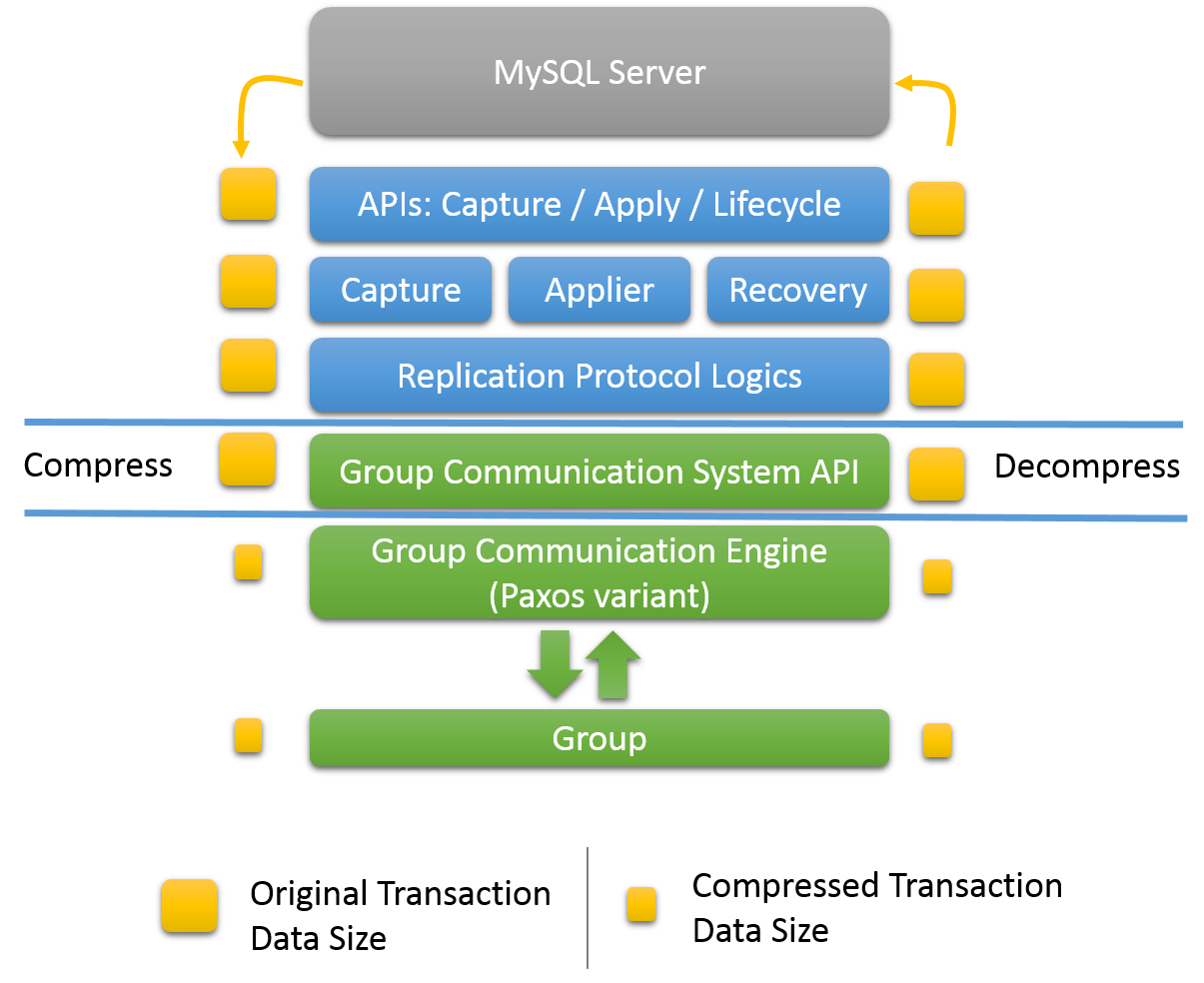

All of this is powered by the provided group communication protocols. These provide a failure detection mechanism, a group membership service, and safe and completely ordered message delivery. All these properties are key to creating a system which ensures that data is consistently replicated across the group of servers. At the very core of this technology lies an implementation of the Paxos algorithm. It acts as the group communication systems engine.

Before getting into the details of MySQL Group Replication, this section introduces some background concepts and an overview of how things work. This provides some context to help understand what is required for Group Replication and what the differences are between classic asynchronous MySQL Replication and Group Replication.

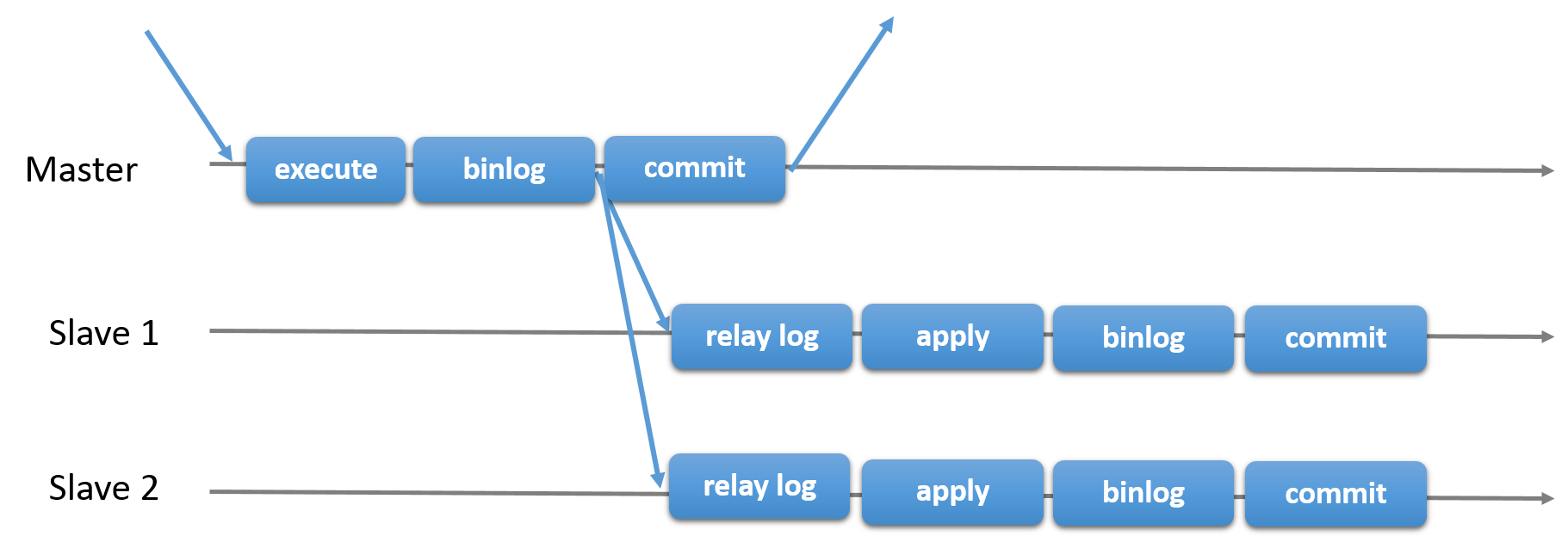

Traditional MySQL Replication provides a simple Primary-Secondary approach to replication. There is a primary (master) and there is one or more secondaries (slaves). The primary executes transactions, commits them and then they are later (thus asynchronously) sent to the secondaries to be either re-executed (in statement-based replication) or applied (in row-based replication). It is a shared-nothing system, where all servers have a full copy of the data by default.

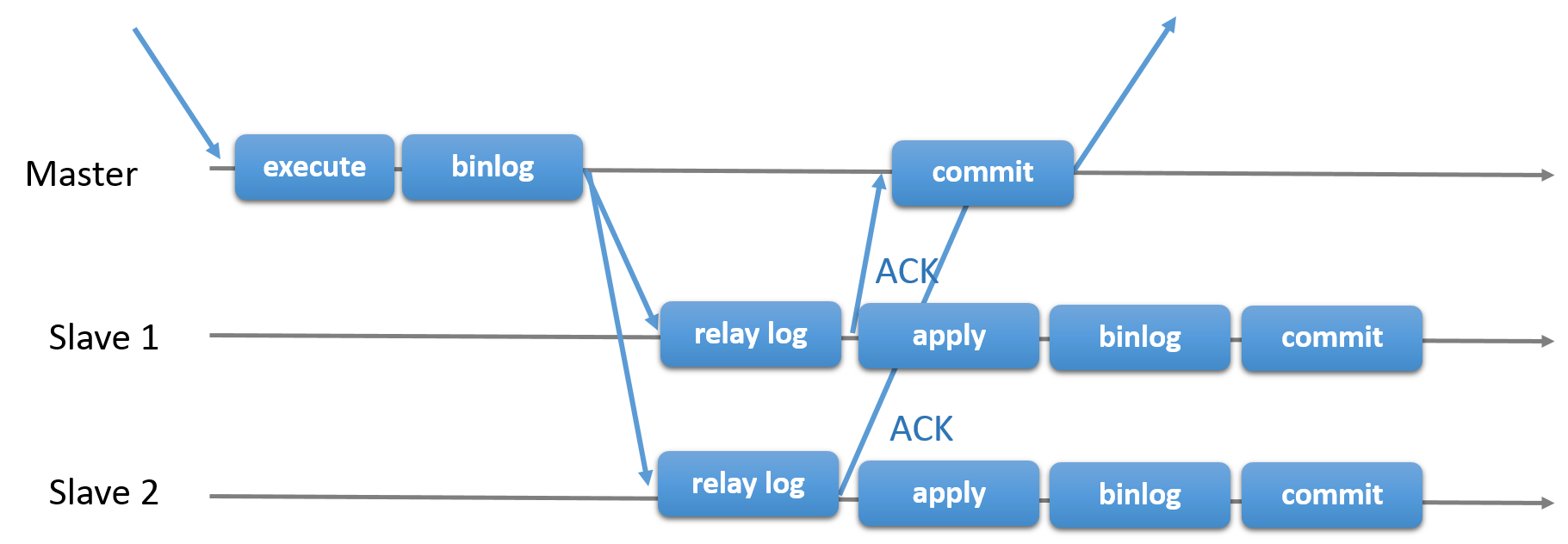

There is also semisynchronous replication, which adds one synchronization step to the protocol. This means that the Primary waits, at commit time, for the secondary to acknowledge that it has received the transaction. Only then does the Primary resume the commit operation.

In the two pictures above, you can see a diagram of the classic asynchronous MySQL Replication protocol (and its semisynchronous variant as well). Blue arrows represent messages exchanged between servers or messages exchanged between servers and the client application.

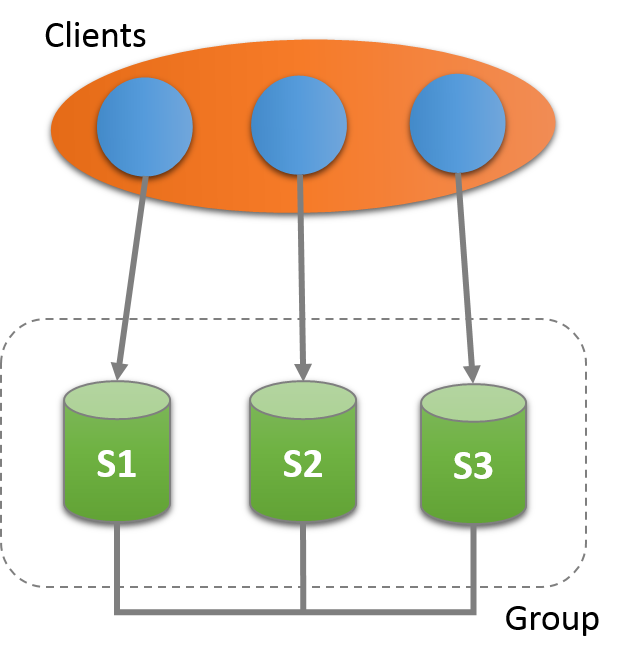

Group Replication is a technique that can be used to implement fault-tolerant systems. The replication group is a set of servers that interact with each other through message passing. The communication layer provides a set of guarantees such as atomic message and total order message delivery. These are very powerful properties that translate into very useful abstractions that one can resort to build more advanced database replication solutions.

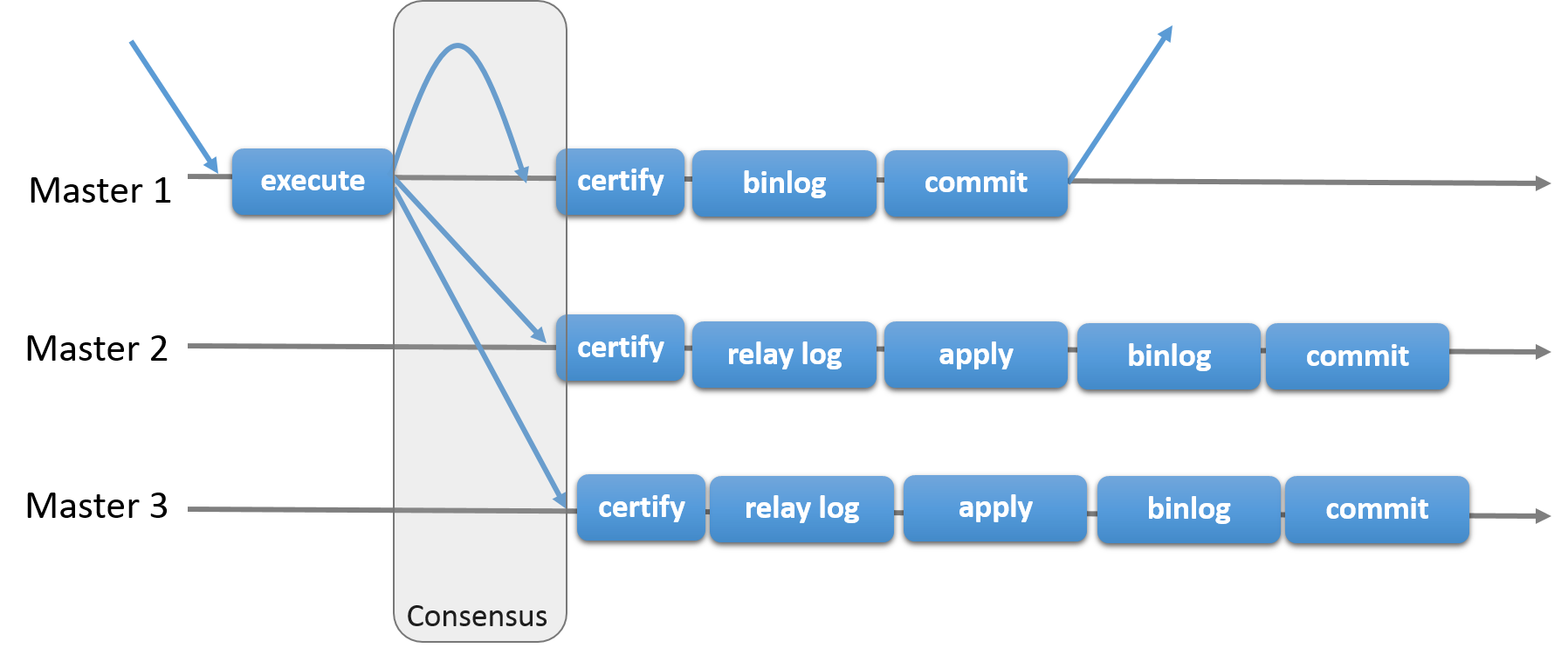

MySQL Group Replication builds on top of such properties and abstractions and implements a multi-master update everywhere replication protocol. In essence, a replication group is formed by multiple servers and each server in the group may execute transactions independently. But all read-write (RW) transactions commit only after they have been approved by the group. Read-only (RO) transactions need no coordination within the group and thus commit immediately. In other words, for any RW transaction the group needs to decide whether it commits or not, thus the commit operation is not a unilateral decision from the originating server. To be precise, when a transaction is ready to commit at the originating server, the server atomically broadcasts the write values (rows changed) and the correspondent write set (unique identifiers of the rows that were updated). Then a global total order is established for that transaction. Ultimately, this means that all servers receive the same set of transactions in the same order. As a consequence, all servers apply the same set of changes in the same order, therefore they remain consistent within the group.

However, there may be conflicts between transactions that execute concurrently on different servers. Such conflicts are detected by inspecting the write sets of two different and concurrent transactions, in a process called certification. If two concurrent transactions, that executed on different servers, update the same row, then there is a conflict. The resolution procedure states that the transaction that was ordered first commits on all servers, whereas the transaction ordered second aborts, and thus is rolled back on the originating server and dropped by the other servers in the group. This is in fact a distributed first commit wins rule.

Finally, Group Replication is a shared-nothing replication scheme where each server has its own entire copy of the data.

The Figure above depicts the MySQL Group Replication protocol and by comparing it to MySQL Replication (or even MySQL semisynchronous replication) you can see some differences. Note that some underlying consensus and Paxos related messages are missing from this picture for the sake of clarity.

Group Replication enables you to create fault-tolerant systems with redundancy by replicating the system state throughout a set of servers. Consequently, even if some of the servers fail, as long it is not all or a majority, the system is still available, and all it could have degraded performance or scalability, it is still available. Server failures are isolated and independent. They are tracked by a group membership service which relies on a distributed failure detector that is able to signal when any servers leave the group, either voluntarily or due to an unexpected halt. There is a distributed recovery procedure to ensure that when servers join the group they are brought up to date automatically. There is no need for server fail-over, and the multi-master update everywhere nature ensures that not even updates are blocked in the event of a single server failure. Therefore MySQL Group Replication guarantees that the database service is continuously available.

It is important to understand that although the database service is available, in the event of a server crash, those clients connected to it must be redirected, or failed over, to a different server. This is not something Group Replication attempts to resolve. A connector, load balancer, router, or some form of middleware are more suitable to deal with this issue.

To summarize, MySQL Group Replication provides a highly available, highly elastic, dependable MySQL service.

The following examples are typical use cases for Group Replication.

Elastic Replication - Environments that require a very fluid replication infrastructure, where the number of servers has to grow or shrink dynamically and with as few side-effects as possible. For instance, database services for the cloud.

Highly Available Shards - Sharding is a popular approach to achieve write scale-out. Use MySQL Group Replication to implement highly available shards, where each shard maps to a replication group.

Alternative to Master-Slave replication - In certain situations, using a single master server makes it a single point of contention. Writing to an entire group may prove more scalable under certain circumstances.

Autonomic Systems - Additionally, you can deploy MySQL Group Replication purely for the automation that is built into the replication protocol (described already in this and previous chapters).

This section presents details about some of the services that Group Replication builds on.

There is a failure detection mechanism provided that is able to find and report which servers are silent and as such assumed to be dead. At a high level, the failure detector is a distributed service that provides information about which servers may be dead (suspicions). Later if the group agrees that the suspicions are probably true, then the group decides that a given server has indeed failed. This means that the remaining members in the group take a coordinated decision to exclude a given member.

Suspicions are triggered when servers go mute. When server A does not receive messages from server B during a given period, a timeout occurs and a suspicion is raised.

If a server gets isolated from the rest of the group, then it suspects that all others have failed. Being unable to secure agreement with the group (as it cannot secure a quorum), its suspicion does not have consequences. When a server is isolated from the group in this way, it is unable to execute any local transactions.

MySQL Group Replication relies on a group membership service. This is built into the plugin. It defines which servers are online and participating in the group. The list of online servers is often referred to as a view. Therefore, every server in the group has a consistent view of which are the members participating actively in the group at a given moment in time.

Servers have to agree not only on transaction commits, but also which is the current view. Therefore, if servers agree that a new server becomes part of the group, then the group itself is reconfigured to integrate that server in it, triggering a view change. The opposite also happens, if a server leaves the group, voluntarily or not, then the group dynamically rearranges its configuration and a view change is triggered.

Note though that when a member leaves voluntarily, it first initiates a dynamic group reconfiguration. This triggers a procedure, where all members have to agree on the new view without the leaving server. However, if a member leaves involuntarily (for example it has stopped unexpectedly or the network connection is down) then the failure detection mechanism realizes this fact and a reconfiguration of the group is proposed, this one without the failed member. As mentioned this requires agreement from the majority of servers in the group. If the group is not able to reach agreement (for example it partitioned in such a way that there is no majority of servers online), then the system is not be able to dynamically change the configuration and as such, blocks to prevent a split-brain situation. Ultimately, this means that the administrator needs to step in and fix this.

MySQL Group Replication builds on an implementation of the Paxos

distributed algorithm to provide distributed coordination

between servers. As such, it requires a majority of servers to

be active to reach quorum and thus make a decision. This has

direct impact on the number of failures the system can tolerate

without compromising itself and its overall functionality. The

number of servers (n) needed to tolerate f

failures is then n = 2 x f + 1.

In practice this means that to tolerate one failure the group must have three servers in it. As such if one server fails, there are still two servers to form a majority (two out of three) and allow the system to continue to make decisions automatically and progress. However, if a second server fails involuntarily, then the group (with one server left) blocks, because there is no majority to reach a decision.

The following is a small table illustrating the formula above.

Group Size

Majority

Instant Failures Tolerated

1

1

0

2

2

0

3

2

1

4

3

1

5

3

2

6

4

2

7

4

3

The next Chapter covers technical aspects of Group Replication.

MySQL Group Replication is provided as a plugin to MySQL server, and each server in a group requires configuration and installation of the plugin. This section provides a detailed tutorial with the steps required to create a replication group with at least three servers.

Each of the server instances in a group can run on an independent physical machine, or on the same machine. This section explains how to create a replication group with three MySQL Server instances on one physical machine. This means that three data directories are needed, one per server instance, and that you need to configure each instance independently.

This tutorial explains how to get and deploy MySQL Server with the Group Replication plugin, how to configure each server instance before creating a group, and how to use Performance Schema monitoring to verify that everything is working correctly.

The first step is to deploy three instances of MySQL Server.

Group Replication is a built-in MySQL plugin provided with MySQL

Server 5.7.17 and later. For more background information on

MySQL plugins, see Section 5.6, “MySQL Server Plugins”. This

procedure assumes that MySQL Server was downloaded and unpacked

into the directory named mysql-5.7. The

following procedure uses one physical machine, therefore each

MySQL server instance requires a specific data directory for the

instance. Create the data directories in a directory named

data and initialize each one.

mkdir datamysql-5.7/bin/mysqld --initialize-insecure --basedir=$PWD/mysql-5.7 --datadir=$PWD/data/s1mysql-5.7/bin/mysqld --initialize-insecure --basedir=$PWD/mysql-5.7 --datadir=$PWD/data/s2mysql-5.7/bin/mysqld --initialize-insecure --basedir=$PWD/mysql-5.7 --datadir=$PWD/data/s3

Inside data/s1, data/s2,

data/s3 is an initialized data directory,

containing the mysql system database and related tables and much

more. To learn more about the initialization procedure, see

Section 2.9.1.1, “Initializing the Data Directory Manually Using mysqld”.

Do not use --initialize-insecure in

production environments, it is only used here to simplify the

tutorial. For more information on security settings, see

Section 21.5, “Group Replication Security”.

This section explains the configuration settings required for MySQL Server instances that you want to use for Group Replication. For background information, see Section 21.7.2, “Group Replication Limitations”.

To install and use the Group Replication plugin you must configure the MySQL Server instance correctly. It is recommended to store the configuration in the instance's configuration file. See Section 4.2.6, “Using Option Files” for more information. Unless stated otherwise, what follows is the configuration for the first instance in the group, referred to as s1 in this procedure. The following section shows an example server configuration.

[mysqld] # server configuration datadir=<full_path_to_data>/data/s1 basedir=<full_path_to_bin>/mysql-5.7/ port=24801 socket=<full_path_to_sock_dir>/s1.sock

These settings configure MySQL server to use the data directory created earlier and which port the server should open and start listening for incoming connections.

The non-default port of 24801 is used because in this tutorial the three server instances use the same hostname. In a setup with three different machines this would not be required.

The following settings configure replication according to the MySQL Group Replication requirements.

server_id=1 gtid_mode=ON enforce_gtid_consistency=ON master_info_repository=TABLE relay_log_info_repository=TABLE binlog_checksum=NONE log_slave_updates=ON log_bin=binlog binlog_format=ROW

These settings configure the server to use the unique identifier number 1, to enable global transaction identifiers and to store replication metadata in system tables instead of files. Additionally, it instructs the server to turn on binary logging, use row-based format and disable binary log event checksums. For more details see Section 21.7.2, “Group Replication Limitations”.

At this point the my.cnf file ensures

that the server is configured and is instructed to instantiate

the replication infrastructure under a given configuration.

The following section configures the Group Replication

settings for the server.

transaction_write_set_extraction=XXHASH64 loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" loose-group_replication_start_on_boot=off loose-group_replication_local_address= "127.0.0.1:24901" loose-group_replication_group_seeds= "127.0.0.1:24901,127.0.0.1:24902,127.0.0.1:24903" loose-group_replication_bootstrap_group= off

The loose- prefix used for the

group_replication variables above instructs the server to

continue to start if the Group Replication plugin has not

been loaded at the time the server is started.

Line 1 instructs the server that for each transaction it has to collect the write set and encode it as a hash using the XXHASH64 hashing algorithm.

Line 2 tells the plugin that the group that it is joining, or creating, is named "aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa".

Line 3 instructs the plugin to not start operations automatically when the server starts.

Line 4 tells the plugin to use the IP address 127.0.0.1, or localhost, and port 24901 for incoming connections from other members in the group.

ImportantThe server listens on this port for member-to-member connections. This port must not be used for user applications at all, it must be reserved for communication between different members of the group while running Group Replication.

The local address configured by

group_replication_local_addressmust be accessible to all group members. For example, if each server instance is on a different machine use the IP and port of the machine, such as 10.0.0.1:33061. The recommended port forgroup_replication_local_addressis 33061, but in this tutorial we use three server instances running on one machine, thus ports 24901 to 24903 are used.Line 5 tells the plugin that the following members on those hosts and ports should be contacted in case it needs to join the group. These are seed members, which are used when this member wants to connect to the group. Upon joining the server contacts one of them first (seed) and then it asks the group to reconfigure to allow the joiner to be accepted in the group. Note that this option does not need to list all members in the group, but rather a list of servers that should be contacted in case this server wishes to join the group.

The server that starts the group does not make use of this option, since it is the initial server and as such, it is in charge of bootstrapping the group. The second server joining asks the one and only member in the group to join and then the group expands. The third server joining can ask any of these two to join, and then the group expands again. Subsequent servers repeat this procedure when joining.

WarningWhen joining multiple servers at the same time, make sure that they point to seed members that are already in the group. Do not use members that are also joining the group as as seeds, because they may not yet be in the group when contacted.

It is good practice to start the bootstrap member first, and let it create the group. Then make it the seed member for the rest of the members that are joining. This ensures that there is a group formed when joining the rest of the members.

Creating a group and joining multiple members at the same time is not supported. It may work, but chances are that the operations race and then the act of joining the group ends up in an error or a time out.

Line 6 instructs the plugin whether to boostrap the group or not.

ImportantThis option must only be used on one server instance at any time, usually the first time you bootstrap the group (or in case the entire group is brought down and back up again). If you bootstrap the group multiple times, for example when multiple server instances have this option set, then they could create an artificial split brain scenario, in which two distinct groups with the same name exist. Disable this option after the first server instance comes online.

Configuration for all servers in the group is quite similar.

You need to change the specifics about each server (for

example server_id,

datadir,

group_replication_local_address).

This is illustrated later in this tutorial.

Group Replication uses the asynchronous replication protocol to

achieve distributed recovery, synchronizing group members before

joining them to the group. The distributed recovery process

relies on a replication channel named

group_replication_recovery which is used to

transfer transactions between group members. Therefore you need

to set up a replication user with the correct permissions so

that Group Replication can establish direct member-to-member

recovery replication channels.

Start the server:

mysql-5.7/bin/mysqld --defaults-file=data/s1/s1.cnf

Create a MySQL user with the

REPLICATION-SLAVE privilege. This

process should not be captured in the

binary log to avoid the changes being propagated to other server

instances. In the following example the user

rpl_user with the password

rpl_pass is shown. When configuring

your servers use a suitable user name and password. Connect to

server s1 and issue the following statements:

mysql> SET SQL_LOG_BIN=0;mysql> CREATE USER rpl_user@'%' IDENTIFIED BY 'rpl_pass';mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_user@'%';mysql> FLUSH PRIVILEGES;mysql> SET SQL_LOG_BIN=1;

Once the user has been configured as above, use the

CHANGE MASTER TO statement to

configure the server to use the given credentials for the

group_replication_recovery replication

channel the next time it needs to recover its state from another

member. Issue the following, replacing

rpl_user and

rpl_pass with the values used when

creating the user.

mysql> CHANGE MASTER TO MASTER_USER='rpl_user', MASTER_PASSWORD='rpl_pass' \\

FOR CHANNEL 'group_replication_recovery';

Distributed recovery is the first step taken by a server that

joins the group. If these credentials are not set correctly as

shown, the server cannot run the recovery process and gain

synchrony with the other group members, and hence ultimately

cannot join the group. Similarly, if the member cannot correctly

identify the other members via the server's

hostname the recovery process can fail. It is

recommended that operating systems running MySQL have a properly

configured unique hostname, either using DNS

or local settings. This hostname can be

verified in the Member_host column of the

performance_schema.replication_group_members

table. If multiple group members externalize a default

hostname set by the operating system, there

is a chance of the member not resolving to the correct member

address and not being able to join the group. In such a

situation use report_host to

configure a unique hostname to be

externalized by each of the servers.

Once server s1 has been configured and started, install the Group Replication plugin. Connect to the server and issue the following command:

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

The mysql.session user must exist before

you can load Group Replication.

mysql.session was added in MySQL version

8.0.2. If your data dictionary was initialized using an

earlier version you must run the

mysql_upgrade procedure. If the upgrade is

not run, Group Replication fails to start with the error

message There was an error when trying to access

the server with user: mysql.session@localhost. Make sure the

user is present in the server and that mysql_upgrade was ran

after a server update..

To check that the plugin was installed successfully, issue

SHOW PLUGINS; and check the output. It should

show something like this:

mysql> SHOW PLUGINS; +----------------------------+----------+--------------------+----------------------+-------------+ | Name | Status | Type | Library | License | +----------------------------+----------+--------------------+----------------------+-------------+ | binlog | ACTIVE | STORAGE ENGINE | NULL | PROPRIETARY | (...) | group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | PROPRIETARY | +----------------------------+----------+--------------------+----------------------+-------------+

To start the group, instruct server s1 to bootstrap the group

and then start Group Replication. This bootstrap should only be

done by a single server, the one that starts the group and only

once. This is why the value of the bootstrap configuration

option was not saved in the configuration file. If it is saved

in the configuration file, upon restart the server automatically

bootstraps a second group with the same name. This would result

in two distinct groups with the same name. The same reasoning

applies to stopping and restarting the plugin with this option

set to ON.

SET GLOBAL group_replication_bootstrap_group=ON; START GROUP_REPLICATION; SET GLOBAL group_replication_bootstrap_group=OFF;

Once the START GROUP_REPLICATION statement

returns, the group has been started. You can check that the

group is now created and that there is one member in it:

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+---------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ | group_replication_applier | ce9be252-2b71-11e6-b8f4-00212844f856 | myhost | 24801 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ 1 row in set (0,00 sec)

The information in this table confirms that there is a member in

the group with the unique identifier

ce9be252-2b71-11e6-b8f4-00212844f856, that it

is ONLINE and is at myhost

listening for client connections on port

24801.

For the purpose of demonstrating that the server is indeed in a group and that it is able to handle load, create a table and add some content to it.

mysql>CREATE DATABASE test;mysql>USE test;mysql>CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL);mysql>INSERT INTO t1 VALUES (1, 'Luis');

Check the content of table t1 and the binary

log.

mysql>SELECT * FROM t1;+----+------+ | c1 | c2 | +----+------+ | 1 | Luis | +----+------+ 1 row in set (0,00 sec) mysql>SHOW BINLOG EVENTS;+---------------+-----+----------------+-----------+-------------+--------------------------------------------------------------------+ | Log_name | Pos | Event_type | Server_id | End_log_pos | Info | +---------------+-----+----------------+-----------+-------------+--------------------------------------------------------------------+ | binlog.000001 | 4 | Format_desc | 1 | 123 | Server ver: 8.0.2-gr080-log, Binlog ver: 4 | | binlog.000001 | 123 | Previous_gtids | 1 | 150 | | | binlog.000001 | 150 | Gtid | 1 | 211 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1' | | binlog.000001 | 211 | Query | 1 | 270 | BEGIN | | binlog.000001 | 270 | View_change | 1 | 369 | view_id=14724817264259180:1 | | binlog.000001 | 369 | Query | 1 | 434 | COMMIT | | binlog.000001 | 434 | Gtid | 1 | 495 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:2' | | binlog.000001 | 495 | Query | 1 | 585 | CREATE DATABASE test | | binlog.000001 | 585 | Gtid | 1 | 646 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:3' | | binlog.000001 | 646 | Query | 1 | 770 | use `test`; CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL) | | binlog.000001 | 770 | Gtid | 1 | 831 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:4' | | binlog.000001 | 831 | Query | 1 | 899 | BEGIN | | binlog.000001 | 899 | Table_map | 1 | 942 | table_id: 108 (test.t1) | | binlog.000001 | 942 | Write_rows | 1 | 984 | table_id: 108 flags: STMT_END_F | | binlog.000001 | 984 | Xid | 1 | 1011 | COMMIT /* xid=38 */ | +---------------+-----+----------------+-----------+-------------+--------------------------------------------------------------------+ 15 rows in set (0,00 sec)

As seen above, the database and the table objects were created and their corresponding DDL statements were written to the binary log. Also, the data was inserted into the table and written to the binary log. The importance of the binary log entries is illustrated in the following section when the group grows and distributed recovery is executed as new members try to catch up and become online.

At this point, the group has one member in it, server s1, which has some data in it. It is now time to expand the group by adding the other two servers configured previously.

In order to add a second instance, server s2, first create the

configuration file for it. The configuration is similar to the

one used for server s1, except for things such as the location

of the data directory, the ports that s2 is going to be

listening on or its

server_id. These different

lines are highlighted in the listing below.

[mysqld] # server configuration datadir=<full_path_to_data>/data/s2 basedir=<full_path_to_bin>/mysql-5.7/ port=24802 socket=<full_path_to_sock_dir>/s2.sock # # Replication configuration parameters # server_id=2 gtid_mode=ON enforce_gtid_consistency=ON master_info_repository=TABLE relay_log_info_repository=TABLE binlog_checksum=NONE log_bin=binlog binlog_format=ROW # # Group Replication configuration # transaction_write_set_extraction=XXHASH64 loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" loose-group_replication_start_on_boot=off loose-group_replication_local_address= "127.0.0.1:24902" loose-group_replication_group_seeds= "127.0.0.1:24901,127.0.0.1:24902,127.0.0.1:24903" loose-group_replication_bootstrap_group= off

Similar to the procedure for server s1, with the configuration file in place you launch the server.

mysql-5.7/bin/mysqld --defaults-file=data/s2/s2.cnf

Then configure the recovery credentials as follows. The commands are the same as used when setting up server s1 as the user is shared within the group. Issue the following statements on s2.

SET SQL_LOG_BIN=0;CREATE USER rpl_user@'%';GRANT REPLICATION SLAVE ON *.* TO rpl_user@'%' IDENTIFIED BY 'rpl_pass';SET SQL_LOG_BIN=1;CHANGE MASTER TO MASTER_USER='rpl_user', MASTER_PASSWORD='rpl_pass' \\ FOR CHANNEL 'group_replication_recovery';

Install the Group Replication plugin and start the process of joining the server to the group. The following example installs the plugin in the same way as used while deploying server s1.

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so'; Query OK, 0 rows affected (0,01 sec)

Add server s2 to the group.

mysql> START GROUP_REPLICATION; Query OK, 0 rows affected (44,88 sec)

Unlike the previous steps that were the same as those executed

on s1, here there is a difference in that you do

not issue SET GLOBAL

group_replication_bootstrap_group=ON; before

starting Group Replication, because the group has already been

created and bootstrapped by server s1. At this point server s2

only needs to be added to the already existing group.

When Group Replication starts successfully and the server

joins the group, if the

super_read_only variable is

set to 1 then the

super_read_only variable is

set to 0. By setting

super_read_only to 1 in the

member's configuration file, you can ensure that

servers which fail when starting Group Replication for any

reason do not accept transactions.

Checking the

performance_schema.replication_group_members

table again shows that there are now two

ONLINE servers in the group.

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+---------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ | group_replication_applier | 395409e1-6dfa-11e6-970b-00212844f856 | myhost | 24801 | ONLINE | | group_replication_applier | ac39f1e6-6dfa-11e6-a69d-00212844f856 | myhost | 24802 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ 2 rows in set (0,00 sec)

As server s2 is also marked as ONLINE, it must have already caught up with server s1 automatically. Verify that it has indeed synchronized with server s1 as follows.

mysql> SHOW DATABASES LIKE 'test'; +-----------------+ | Database (test) | +-----------------+ | test | +-----------------+ 1 row in set (0,00 sec) mysql> SELECT * FROM test.t1; +----+------+ | c1 | c2 | +----+------+ | 1 | Luis | +----+------+ 1 row in set (0,00 sec) mysql> SHOW BINLOG EVENTS; +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ | Log_name | Pos | Event_type | Server_id | End_log_pos | Info | +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ | binlog.000001 | 4 | Format_desc | 2 | 123 | Server ver: 5.7.17-log, Binlog ver: 4 | | binlog.000001 | 123 | Previous_gtids | 2 | 150 | | | binlog.000001 | 150 | Gtid | 1 | 211 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1' | | binlog.000001 | 211 | Query | 1 | 270 | BEGIN | | binlog.000001 | 270 | View_change | 1 | 369 | view_id=14724832985483517:1 | | binlog.000001 | 369 | Query | 1 | 434 | COMMIT | | binlog.000001 | 434 | Gtid | 1 | 495 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:2' | | binlog.000001 | 495 | Query | 1 | 585 | CREATE DATABASE test | | binlog.000001 | 585 | Gtid | 1 | 646 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:3' | | binlog.000001 | 646 | Query | 1 | 770 | use `test`; CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL) | | binlog.000001 | 770 | Gtid | 1 | 831 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:4' | | binlog.000001 | 831 | Query | 1 | 890 | BEGIN | | binlog.000001 | 890 | Table_map | 1 | 933 | table_id: 108 (test.t1) | | binlog.000001 | 933 | Write_rows | 1 | 975 | table_id: 108 flags: STMT_END_F | | binlog.000001 | 975 | Xid | 1 | 1002 | COMMIT /* xid=30 */ | | binlog.000001 | 1002 | Gtid | 1 | 1063 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:5' | | binlog.000001 | 1063 | Query | 1 | 1122 | BEGIN | | binlog.000001 | 1122 | View_change | 1 | 1261 | view_id=14724832985483517:2 | | binlog.000001 | 1261 | Query | 1 | 1326 | COMMIT | +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ 19 rows in set (0,00 sec)

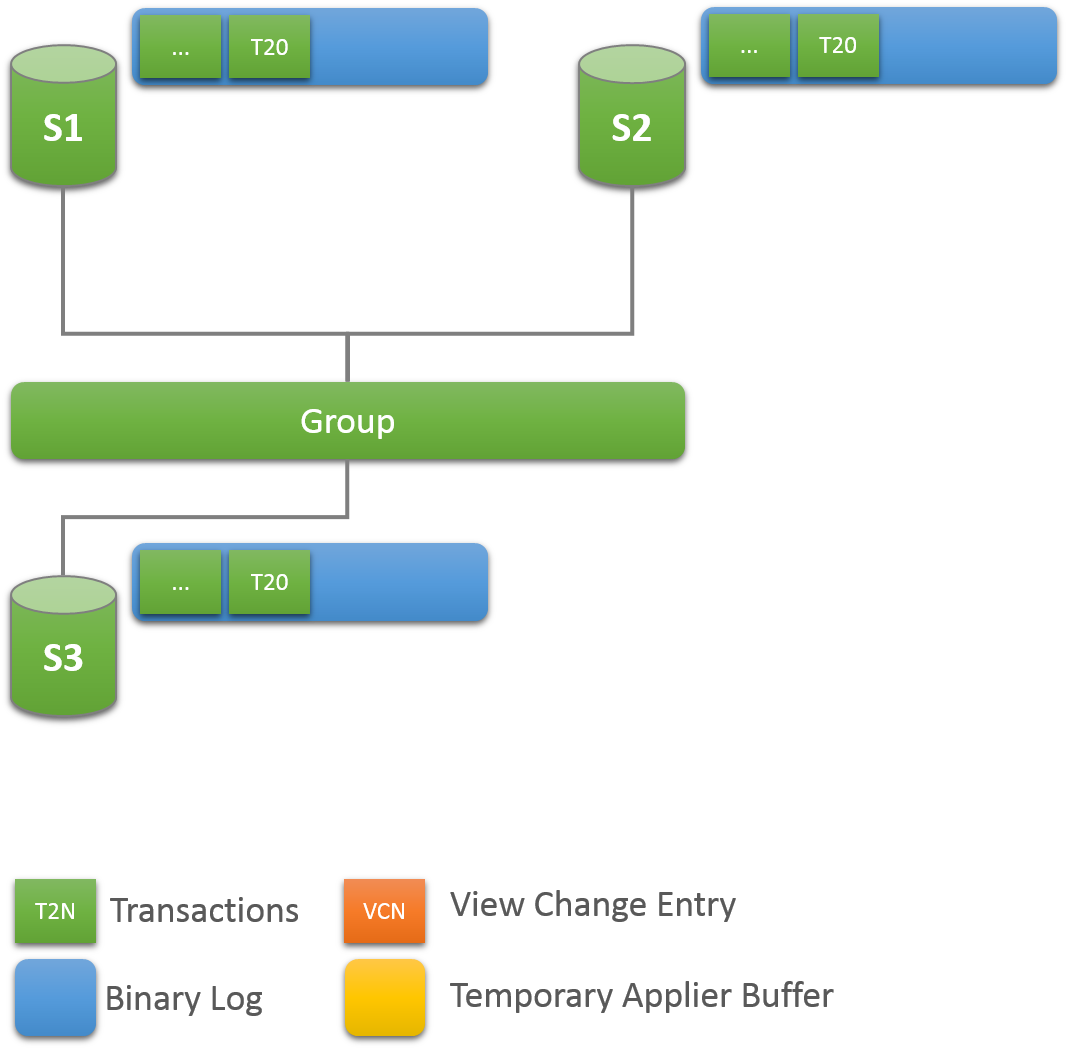

As seen above, the second server has been added to the group and it has replicated the changes from server s1 automatically. According to the distributed recovery procedure, this means that just after joining the group and immediately before being declared online, server s2 has connected to server s1 automatically and fetched the missing data from it. In other words, it copied transactions from the binary log of s1 that it was missing, up to the point in time that it joined the group.

Adding additional instances to the group is essentially the same sequence of steps as adding the second server, except that the configuration has to be changed as it had to be for server s2. To summarise the required commands:

1. Create the configuration file

[mysqld] # server configuration datadir=<full_path_to_data>/data/s3 basedir=<full_path_to_bin>/mysql-5.7/ port=24803 socket=<full_path_to_sock_dir>/s3.sock # # Replication configuration parameters # server_id=3 gtid_mode=ON enforce_gtid_consistency=ON master_info_repository=TABLE relay_log_info_repository=TABLE binlog_checksum=NONE log_bin=binlog binlog_format=ROW # # Group Replication configuration # transaction_write_set_extraction=XXHASH64 loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" loose-group_replication_start_on_boot=off loose-group_replication_local_address= "127.0.0.1:24903" loose-group_replication_group_seeds= "127.0.0.1:24901,127.0.0.1:24902,127.0.0.1:24903" loose-group_replication_bootstrap_group= off

2. Start the server

mysql-5.7/bin/mysqld --defaults-file=data/s3/s3.cnf

3. Configure the recovery credentials for the group_replication_recovery channel.

SET SQL_LOG_BIN=0; CREATE USER rpl_user@'%'; GRANT REPLICATION SLAVE ON *.* TO rpl_user@'%' IDENTIFIED BY 'rpl_pass'; FLUSH PRIVILEGES; SET SQL_LOG_BIN=1; CHANGE MASTER TO MASTER_USER='rpl_user', MASTER_PASSWORD='rpl_pass' \\ FOR CHANNEL 'group_replication_recovery';

4. Install the Group Replication plugin and start it.

INSTALL PLUGIN group_replication SONAME 'group_replication.so'; START GROUP_REPLICATION;

At this point server s3 is booted and running, has joined the

group and caught up with the other servers in the group.

Consulting the

performance_schema.replication_group_members

table again confirms this is the case.

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+---------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ | group_replication_applier | 395409e1-6dfa-11e6-970b-00212844f856 | myhost | 24801 | ONLINE | | group_replication_applier | 7eb217ff-6df3-11e6-966c-00212844f856 | myhost | 24803 | ONLINE | | group_replication_applier | ac39f1e6-6dfa-11e6-a69d-00212844f856 | myhost | 24802 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+---------------+ 3 rows in set (0,00 sec)

Issuing this same query on server s2 or server s1 yields the same result. Also, you can verify that server s3 has also caught up:

mysql> SHOW DATABASES LIKE 'test'; +-----------------+ | Database (test) | +-----------------+ | test | +-----------------+ 1 row in set (0,00 sec) mysql> SELECT * FROM test.t1; +----+------+ | c1 | c2 | +----+------+ | 1 | Luis | +----+------+ 1 row in set (0,00 sec) mysql> SHOW BINLOG EVENTS; +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ | Log_name | Pos | Event_type | Server_id | End_log_pos | Info | +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ | binlog.000001 | 4 | Format_desc | 3 | 123 | Server ver: 5.7.17-log, Binlog ver: 4 | | binlog.000001 | 123 | Previous_gtids | 3 | 150 | | | binlog.000001 | 150 | Gtid | 1 | 211 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1' | | binlog.000001 | 211 | Query | 1 | 270 | BEGIN | | binlog.000001 | 270 | View_change | 1 | 369 | view_id=14724832985483517:1 | | binlog.000001 | 369 | Query | 1 | 434 | COMMIT | | binlog.000001 | 434 | Gtid | 1 | 495 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:2' | | binlog.000001 | 495 | Query | 1 | 585 | CREATE DATABASE test | | binlog.000001 | 585 | Gtid | 1 | 646 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:3' | | binlog.000001 | 646 | Query | 1 | 770 | use `test`; CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL) | | binlog.000001 | 770 | Gtid | 1 | 831 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:4' | | binlog.000001 | 831 | Query | 1 | 890 | BEGIN | | binlog.000001 | 890 | Table_map | 1 | 933 | table_id: 108 (test.t1) | | binlog.000001 | 933 | Write_rows | 1 | 975 | table_id: 108 flags: STMT_END_F | | binlog.000001 | 975 | Xid | 1 | 1002 | COMMIT /* xid=29 */ | | binlog.000001 | 1002 | Gtid | 1 | 1063 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:5' | | binlog.000001 | 1063 | Query | 1 | 1122 | BEGIN | | binlog.000001 | 1122 | View_change | 1 | 1261 | view_id=14724832985483517:2 | | binlog.000001 | 1261 | Query | 1 | 1326 | COMMIT | | binlog.000001 | 1326 | Gtid | 1 | 1387 | SET @@SESSION.GTID_NEXT= 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:6' | | binlog.000001 | 1387 | Query | 1 | 1446 | BEGIN | | binlog.000001 | 1446 | View_change | 1 | 1585 | view_id=14724832985483517:3 | | binlog.000001 | 1585 | Query | 1 | 1650 | COMMIT | +---------------+------+----------------+-----------+-------------+--------------------------------------------------------------------+ 23 rows in set (0,00 sec)

Use the Perfomance Schema tables to monitor Group Replication, assuming that the Performance Schema is enabled. Group Replication adds the following tables:

performance_schema.replication_group_member_statsperformance_schema.replication_group_members

These Perfomance Schema replication tables also show information about Group Replication:

performance_schema.replication_connection_statusperformance_schema.replication_applier_status

The replication channels created by the Group Replication plugin are named:

group_replication_recovery- This channel is used for the replication changes that are related to the distributed recovery phase.group_replication_applier- This channel is used for the incoming changes from the group. This is the channel used to apply transactions coming directly from the group.

The following sections describe the information available in each of these tables.

Each member in a replication group certifies and applies transactions received by the group. Statistics regarding the certifier and applier procedures are useful to understand how the applier queue is growing, how many conflicts have been found, how many transactions were checked, which transactions are committed everywhere, and so on.

The

performance_schema.replication_group_member_stats

table provides group-level information related to the

certification process, and also statistics for the transactions

received and originated by each individual member of the

replication group. The information is shared between all the

server instances that are members of the replication group, so

information on all the group members can be queried from any

member. Note that refreshing of statistics for remote members is

controlled by the message period specified in the

group_replication_flow_control_period

option, so these can differ slightly from the locally collected

statistics for the member where the query is made.

Table 21.1 replication_group_member_stats

Field | Description |

|---|---|

Channel_name | The name of the Group Replication channel. |

View_id | The current view identifier for this group. |

Member_id | The member server UUID. This has a different value for each member in the group. This also serves as a key because it is unique to each member. |

Count_transactions_in_queue | The number of transactions in the queue pending conflict detection checks. Once the transactions have been checked for conflicts, if they pass the check, they are queued to be applied as well. |

Count_transactions_checked | The number of transactions that have been checked for conflicts. |

Count_conflicts_detected | The number of transactions that did not pass the conflict detection check. |

Count_transactions_rows_validating | The current size of the conflict detection database (against which each transaction is certified). |

Transactions_committed_all_members | The transactions that have been successfully committed on all members of the replication group. This is updated at a fixed time interval. |

Last_conflict_free_transaction | The transaction identifier of the last conflict free transaction checked. |

Count_transactions_remote_in_applier_queue | The number of transactions that this member has received from the replication group which are waiting to be applied. |

Count_transactions_remote_applied | The number of transactions that this member has received from the replication group which have been applied. |

Count_transactions_local_proposed | The number of transactions that this member originated and sent to the replication group for coordination. |

Count_transactions_local_rollback | The number of transactions that this member originated that were rolled back after being sent to the replication group. |

These fields are important for monitoring the performance of the members connected in the group. For example, suppose that one of the group’s members is delayed and is not able to keep up to date with the other members of the group. In this case you might see a large number of transactions in the queue. Based on this information, you could decide to either remove the member from the group, or delay the processing of transactions on the other members of the group in order to reduce the number of queued transactions. This information can also help you to decide how to adjust the flow control of the Group Replication plugin.

This table is used for monitoring the status of the different server instances that are tracked in the current view, or in other words are part of the group and as such are tracked by the membership service. The information is shared between all the server instances that are members of the replication group, so information on all the group members can be queried from any member.

Table 21.2 replication_group_members

Field | Description |

|---|---|

Channel_name | The name of the Group Replication channel. |

Member_id | The member server UUID. This has a different value for each member in the group. This also serves as a key because it is unique to each member. |

Member_host | The network address of the group member. |

Member_port | The MySQL connection port on which the group member is listening. |

Member_state |

The current state of the group member (which can be

|

Member_role |

The role of the member in the group, either

|

Member_version | The MySQL version of the group member. |

For more information about the Member_host

value and its impact on the distributed recovery process, see

Section 21.2.1.3, “User Credentials”.

When connected to a group, some fields in this table show information regarding Group Replication. For instance, the transactions that have been received from the group and queued in the applier queue (the relay log).

Table 21.3 replication_connection_status

Field | Description |

|---|---|

Channel_name | The name of the Group Replication channel. |

Group_name | Shows the value of the name of the group. It is always a valid UUID. |

Source_UUID | Shows the identifier for the group. It is similar to the group name and it is used as the UUID for all the transactions that are generated during group replication. |

Service_state | Shows whether the member is a part of the group or not. The possible values of service state can be {ON, OFF and CONNECTING}; |

Received_transaction_set | Transactions in this GTID set have been received by this member of the group. |

The state of the Group Replication related channels and threads can be observed using the regular replication_applier_status table as well. If there are many different worker threads applying transactions, then the worker tables can also be used to monitor what each worker thread is doing.

Table 21.4 replication_applier_status

Field | Description |

|---|---|

Channel_name | The name of the Group Replication channel. |

Service_state | Shows whether the applier service is ON or OFF. |

Remaining_delay | Shows whether there is some applier delay configured. |

Count_transactions_retries | The number of retries performed while applying a transaction. |

Received_transaction_set | Transactions in this GTID set have been received by this member of the group. |

The table replication_group_members is updated

whenever there is a view change, for example when the

configuration of the group is dynamically changed. At that point,

servers exchange some of their metadata to synchronize themselves

and continue to cooperate together.

There are various states that a server instance can be in. If servers are communicating properly, all report the same states for all servers. However, if there is a network partition, or a server leaves the group, then different information may be reported, depending on which server is queried. Note that if the server has left the group then obviously it cannot report updated information about other servers' state. If there is a partition, such that quorum is lost, servers are not able to coordinate between themselves. As a consequence, they cannot guess what the status of different servers is. Therefore, instead of guessing their state they report that some servers are unreachable.

Table 21.5 Server State

Field | Description | Group Synchronized |

|---|---|---|

ONLINE | The member is ready to serve as a fully functional group member, meaning that the client can connect and start executing transactions. | Yes |

RECOVERING | The member is in the process of becoming an active member of the group and is currently going through the recovery process, receiving state information from a donor. | No |

OFFLINE | The plugin is loaded but the member does not belong to any group. | No |

ERROR | The state of the member. Whenever there is an error on the recovery phase or while applying changes, the server enters this state. | No |

UNREACHABLE | Whenever the local failure detector suspects that a given server is not reachable, because maybe it has crashed or was disconnected involuntarily, it shows that server's state as 'unreachable'. | No |

Once an instance enters ERROR state, the

super_read_only option is set

to ON. To leave the ERROR

state you must manually configure the instance with

super_read_only=OFF.

Note that Group Replication is not synchronous, but eventually synchronous. More precisely, transactions are delivered to all group members in the same order, but their execution is not synchronized, meaning that after a transaction is accepted to be committed, each member commits at its own pace.

This section describes the different modes of deploying Group Replication, explains common operations for managing groups and provides information about how to tune your groups .

Group Replication operates in the following different modes:

single-primary mode

multi-primary mode

The default mode is single-primary. It is not possible to have members of the group deployed in different modes, for example one configured in multi-primary mode while another one is in single-primary mode. To switch between modes, the group and not the server, needs to be restarted with a different operating configuration. Regardless of the deployed mode, Group Replication does not handle client-side fail-over, that must be handled by the application itself, a connector or a middleware framework such as a proxy or router.

When deployed in multi-primary mode, statements are checked to ensure they are compatible with the mode. The following checks are made when Group Replication is deployed in multi-master mode:

If a transaction is executed under the SERIALIZABLE isolation level, then its commit fails when synchronizing itself with the group.

If a transaction executes against a table that has foreign keys with cascading constraints, then the transaction fails to commit when synchronizing itself with the group.

These checks can be deactivated by setting the option

group_replication_enforce_update_everywhere_checks

to FALSE. When deploying in single-primary

mode, this option must be set to

FALSE.

In this mode the group has a single-primary server that is set

to read-write mode. All the other members in the group are set

to read-only mode (with

super-read-only=ON

). This happens automatically. The primary is typically the

first server to boostrap the group, all other servers that join

automatically learn about the primary server and are set to read

only.

When in single-primary mode, some of the checks deployed in

multi-primary mode are disabled, because the system enforces

that only a single writer server is in the group at a time. For

example, changes to tables that have cascading foreign keys are

allowed, whereas in multi-primary mode they are not. Upon

primary member failure, an automatic primary election mechanism

chooses the next primary member. The method of choosing the next

primary depends on the server's

server_uuid variable and the

group_replication_member_weight

variable.

In the event that the primary member leaves the group, then an

election is performed and a new primary is chosen from the

remaining servers in the group. This election is performed by

looking at the new view, and ordering the potential new

primaries based on the value of

group_replication_member_weight.

The member with the highest value for

group_replication_member_weight

is elected as the new primary. In the event that multiple

servers have the same

group_replication_member_weight,

the servers are then prioritised based on their

server_uuid in lexicographical

order and by picking the first one. Once a new primary is

elected, it is automatically set to read-write and the other

secondaries remain as secondaries, and as such, read-only.

It is a good practice to wait for the new primary to apply its replication related relay-log before re-routing the client applications to it.

In multi-primary mode, there is no notion of a single primary. There is no need to engage an election procedure since there is no server playing any special role.

All servers are set to read-write mode when joining the group.

The following example shows how to find out which server is currently the primary when deployed in single-primary mode.

mysql> SELECT VARIABLE_VALUE FROM performance_schema.global_status WHERE VARIABLE_NAME= 'group_replication_primary_member'; +--------------------------------------+ | VARIABLE_VALUE | +--------------------------------------+ | 69e1a3b8-8397-11e6-8e67-bf68cbc061a4 | +--------------------------------------+ 1 row in set (0,00 sec)

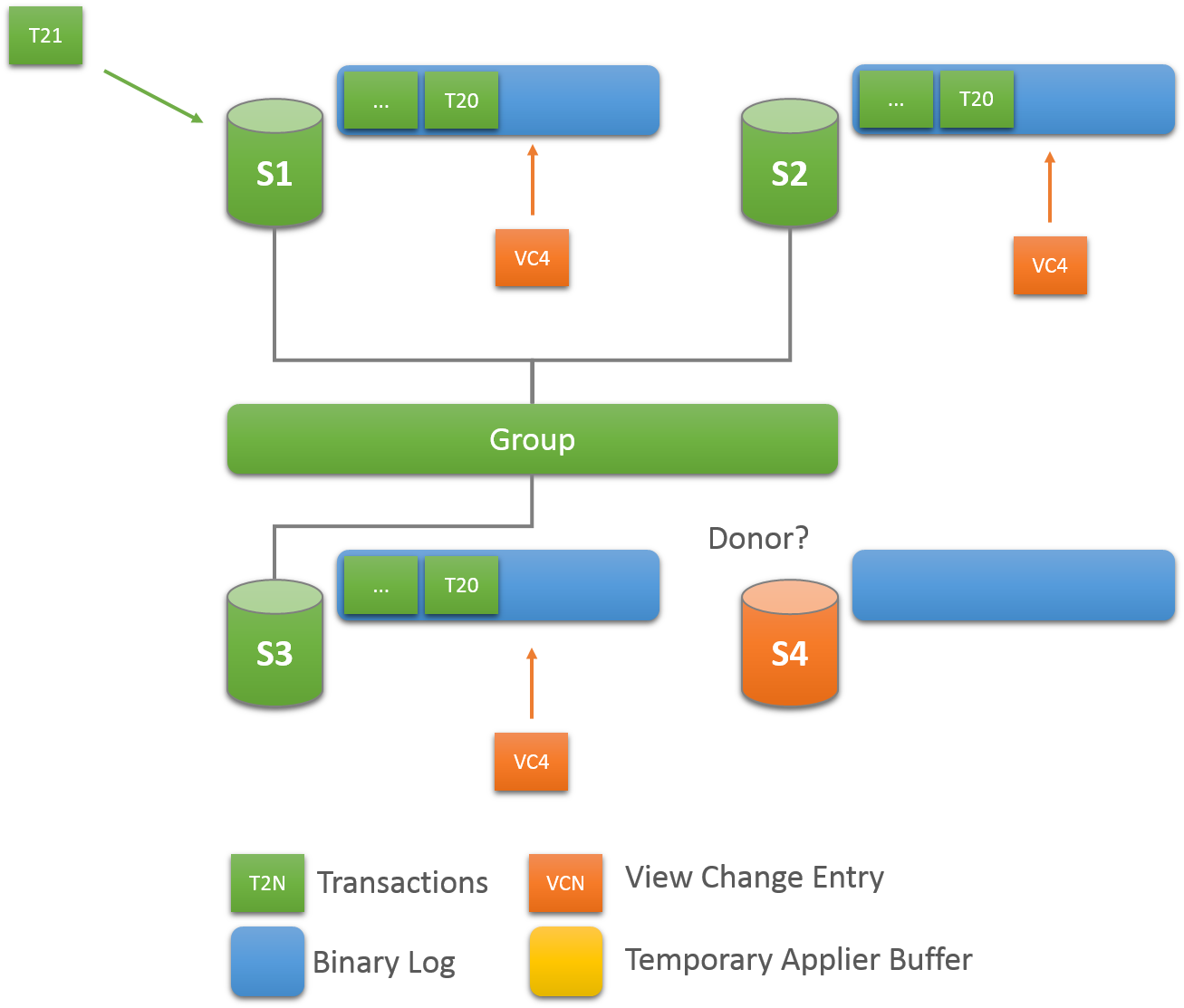

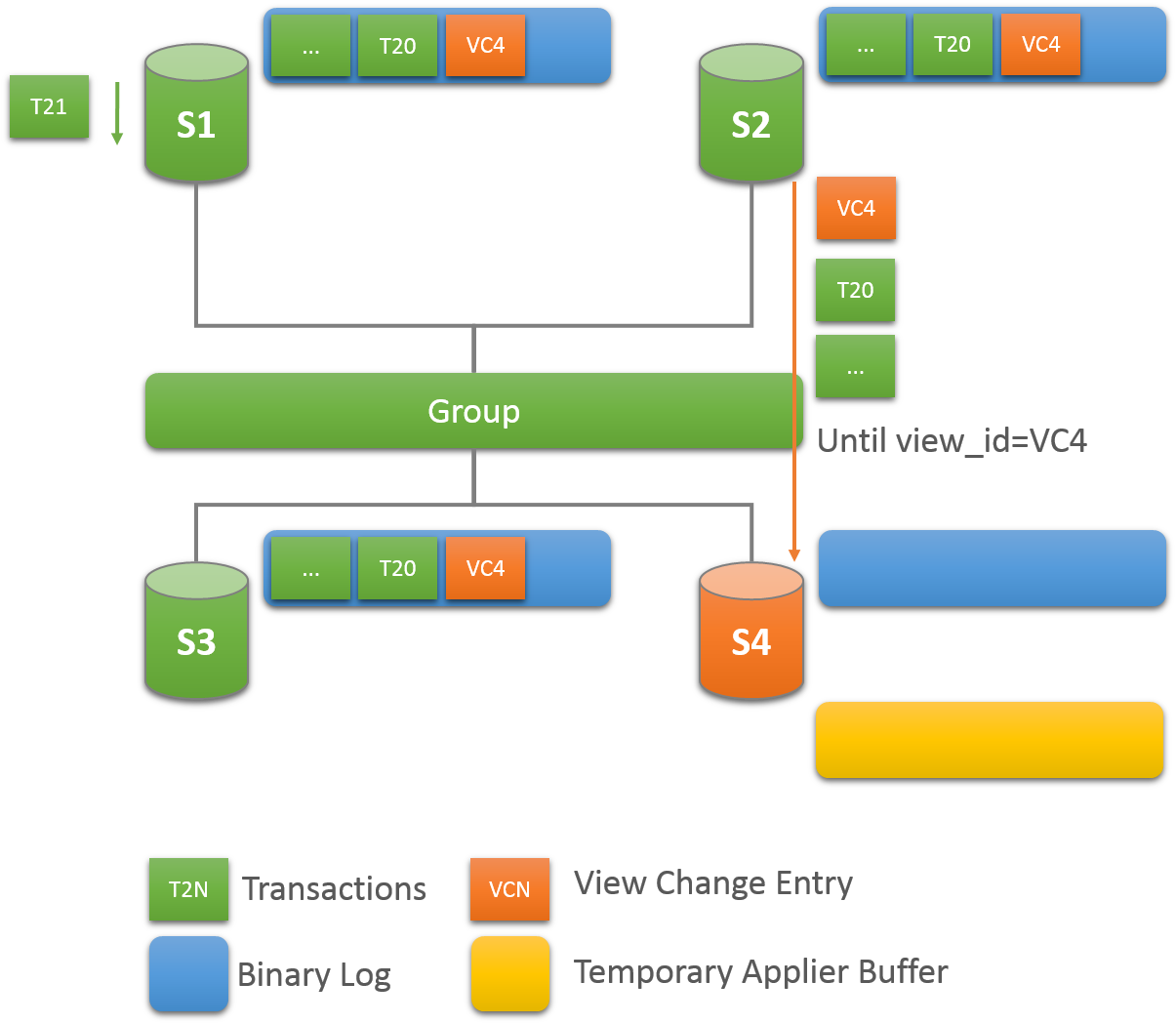

Whenever a new member joins a replication group, it connects to a suitable donor and fetches the data that it has missed up until the point it is declared online. This critical component in Group Replication is fault tolerant and configurable. The following section explains how recovery works and how to tune the settings

A random donor is selected from the existing online members in the group. This way there is a good chance that the same server is not selected more than once when multiple members enter the group.

If the connection to the selected donor fails, a new connection is automatically attempted to a new candidate donor. Once the connection retry limit is reached the recovery procedure terminates with an error.

A donor is picked randomly from the list of online members in the current view.

The other main point of concern in recovery as a whole is to make sure that it copes with failures. Hence, Group Replication provides robust error detection mechanisms. In earlier versions of Group Replication, when reaching out to a donor, recovery could only detect connection errors due to authentication issues or some other problem. The reaction to such problematic scenarios was to switch over to a new donor thus a new connection attempt was made to a different member.

This behavior was extended to also cover other failure scenarios:

Purged data scenarios - If the selected donor contains some purged data that is needed for the recovery process then an error occurs. Recovery detects this error and a new donor is selected.

Duplicated data - If a joiner already contains some data that conflicts with the data coming from the selected donor during recovery then an error occurs. This could be caused by some errant transactions present in the joiner.

One could argue that recovery should fail instead of switching over to another donor, but in heterogeneous groups there is chance that other members share the conflicting transactions and others do not. For that reason, upon error, recovery selects another donor from the group.

Other errors - If any of the recovery threads fail (receiver or applier threads fail) then an error occurs and recovery switches over to a new donor.

In case of some persistent failures or even transient failures recovery automatically retries connecting to the same or a new donor.

The recovery data transfer relies on the binary log and existing MySQL replication framework, therefore it is possible that some transient errors could cause errors in the receiver or applier threads. In such cases, the donor switch over process has retry functionality, similar to that found in regular replication.

The number of attempts a joiner makes when trying to connect to

a donor from the pool of donors is 10. This is configured

through the

group_replication_recovery_retry_count

plugin variable . The following command sets the maximum number

of attempts to connect to a donor to 10.

SET GLOBAL group_replication_recovery_retry_count= 10;

Note that this accounts for the global number of attempts that the joiner makes connecting to each one of the suitable donors.

The

group_replication_recovery_reconnect_interval

plugin variable defines how much time the recovery process

should sleep between donor connection attempts. This variable

has its default set to 60 seconds and you can change this value

dynamically. The following command sets the recovery donor

connection retry interval to 120 seconds.

SET GLOBAL group_replication_recovery_reconnect_interval= 120;

Note, however, that recovery does not sleep after every donor

connection attempt. As the joiner is connecting to different

servers and not to the same one over and over again, it can

assume that the problem that affects server A maybe not affect

server B. As such, recovery suspends only when it has gone

through all the possible donors. Once the joiner has tried to

connect to all the suitable donors in the group and none

remains, the recovery process sleeps for the number of seconds

configured by the

group_replication_recovery_reconnect_interval

variable.

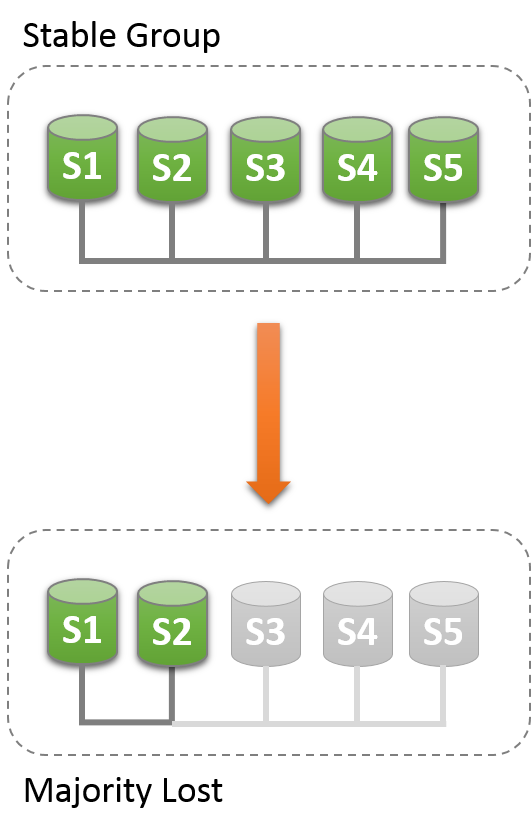

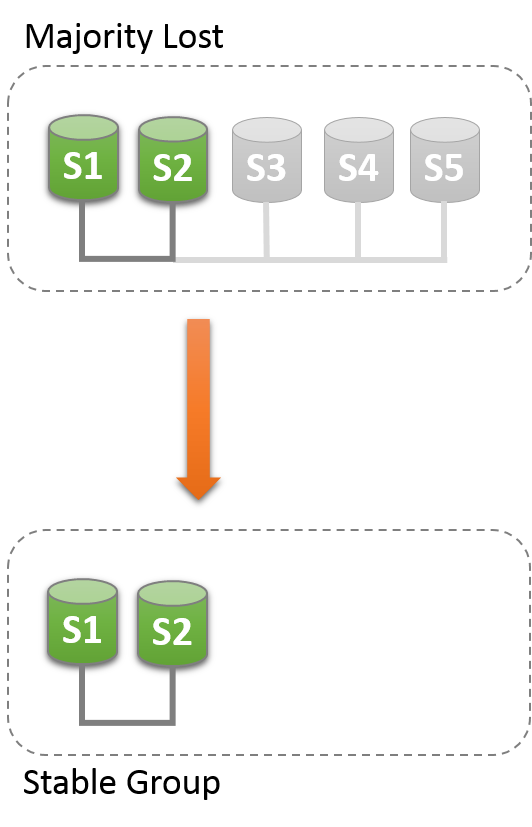

The group needs to achieve consensus whenever a change that needs to be replicated happens. This is the case for regular transactions but is also required for group membership changes and some internal messaging that keeps the group consistent. Consensus requires a majority of group members to agree on a given decision. When a majority of group members is lost, the group is unable to progress and blocks because it cannot secure majority or quorum.

Quorum may be lost when there are multiple involuntary failures, causing a majority of servers to be removed abruptly from the group. For example in a group of 5 servers, if 3 of them become silent at once, the majority is compromised and thus no quorum can be achieved. In fact, the remaining two are not able to tell if the other 3 servers have crashed or whether a network partition has isolated these 2 alone and therefore the group cannot be reconfigured automatically.

On the other hand, if servers exit the group voluntarily, they instruct the group that it should reconfigure itself. In practice, this means that a server that is leaving tells others that it is going away. This means that other members can reconfigure the group properly, the consistency of the membership is maintained and the majority is recalculated. For example, in the above scenario of 5 servers where 3 leave at once, if the 3 leaving servers warn the group that they are leaving, one by one, then the membership is able to adjust itself from 5 to 2, and at the same time, securing quorum while that happens.

Loss of quorum is by itself a side-effect of bad planning. Plan the group size for the number of expected failures (regardless whether they are consecutive, happen all at once or are sporadic).

The following sections explain what to do if the system partitions in such a way that no quorum is automatically achieved by the servers in the group.

The replication_group_members performance

schema table presents the status of each server in the current

view from the perspective of this server. The majority of the

time the system does not run into partitioning, and therefore

the table shows information that is consistent across all

servers in the group. In other words, the status of each server

on this table is agreed by all in the current view. However, if

there is network partitioning, and quorum is lost, then the

table shows the status UNREACHABLE for those

servers that it cannot contact. This information is exported by

the local failure detector built into Group Replication.

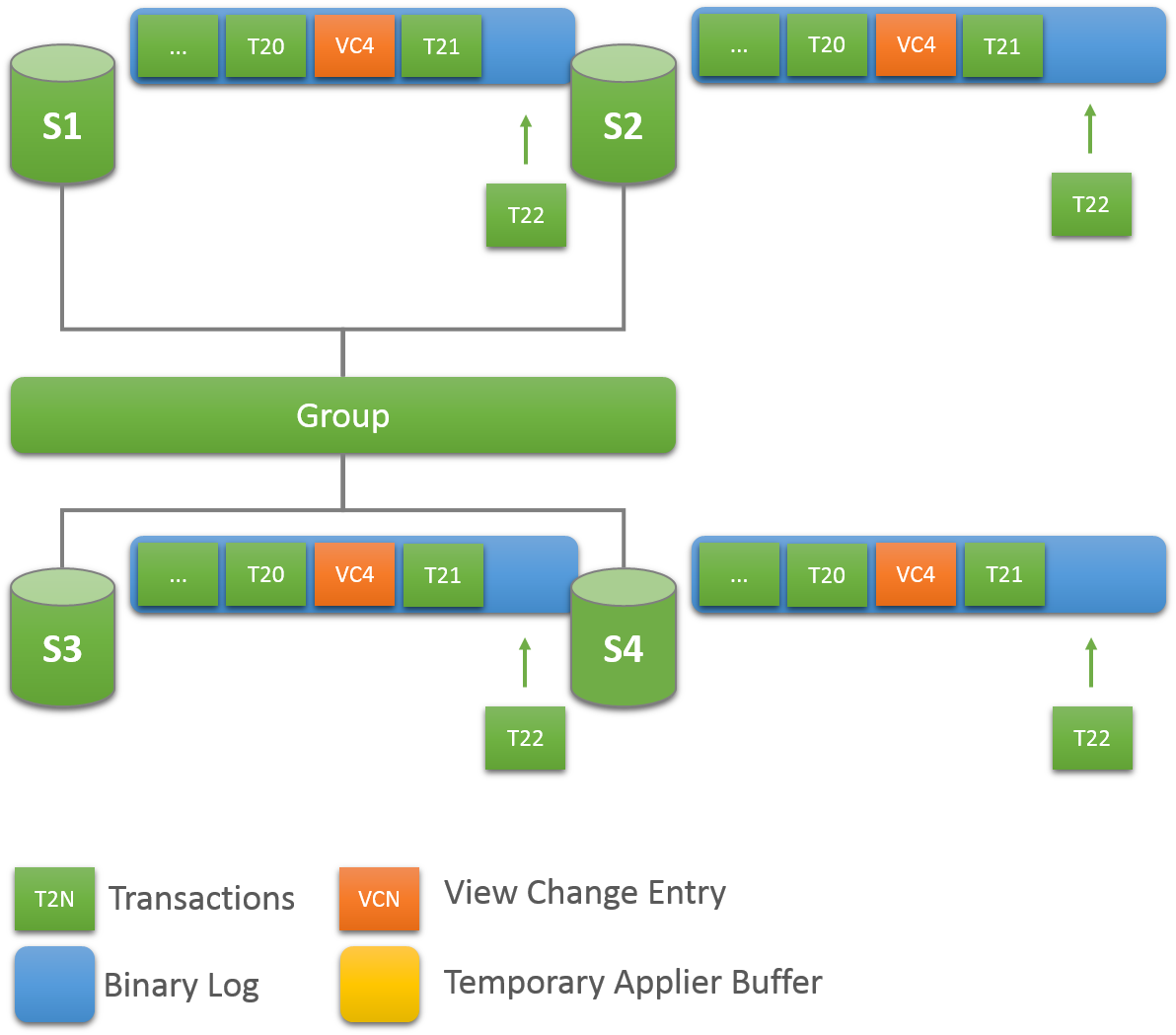

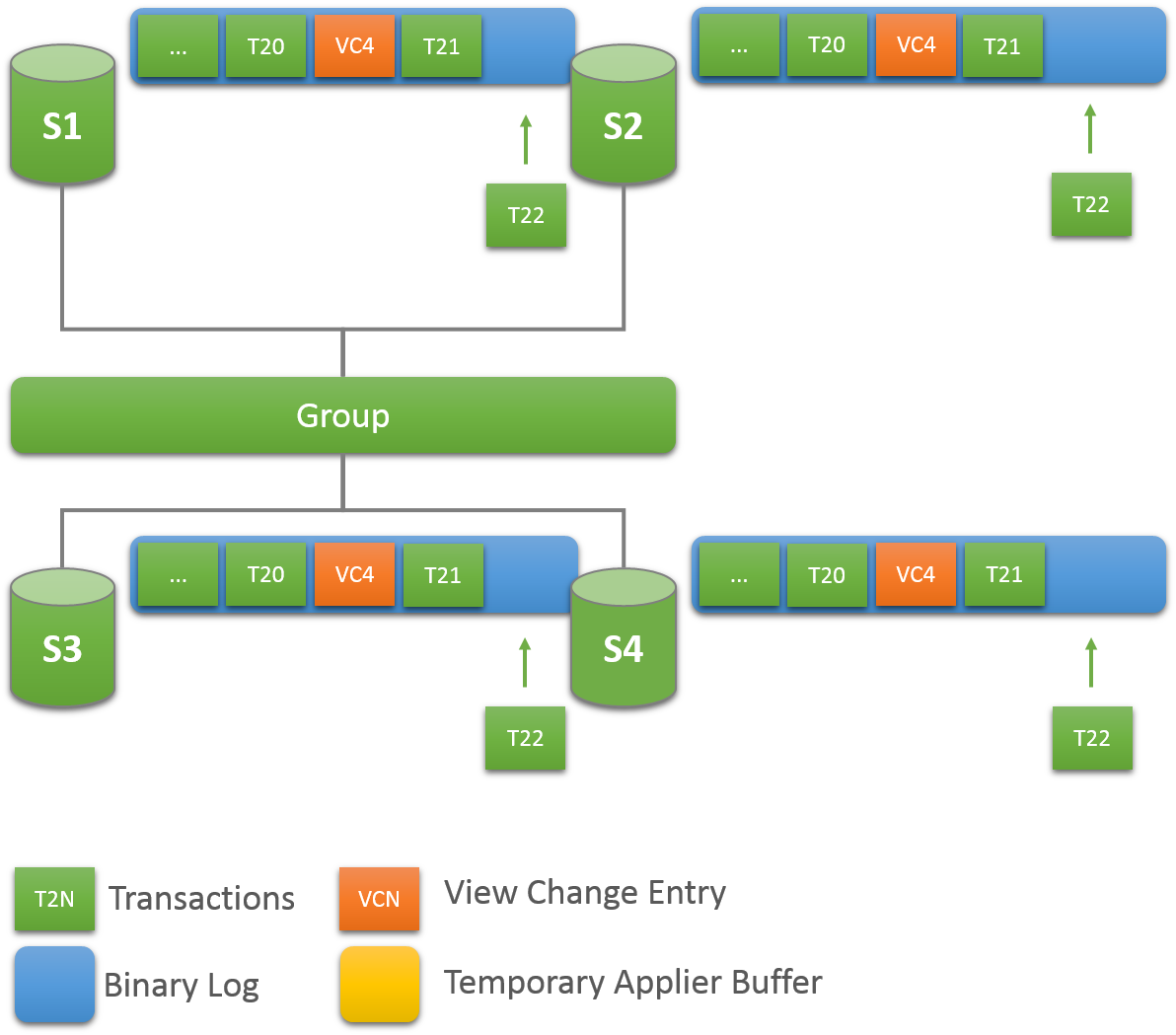

To understand this type of network partition the following section describes a scenario where there are initially 5 servers working together correctly, and the changes that then happen to the group once only 2 servers are online. The scenario is depicted in the figure.

As such, lets assume that there is a group with these 5 servers in it:

Server s1 with member identifier

199b2df7-4aaf-11e6-bb16-28b2bd168d07Server s2 with member identifier

199bb88e-4aaf-11e6-babe-28b2bd168d07Server s3 with member identifier

1999b9fb-4aaf-11e6-bb54-28b2bd168d07Server s4 with member identifier

19ab72fc-4aaf-11e6-bb51-28b2bd168d07Server s5 with member identifier

19b33846-4aaf-11e6-ba81-28b2bd168d07

Initially the group is running fine and the servers are happily

communicating with each other. You can verify this by logging

into s1 and looking at its

replication_group_members performance schema

table. For example:

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 1999b9fb-4aaf-11e6-bb54-28b2bd168d07 | 127.0.0.1 | 13002 | ONLINE | | group_replication_applier | 199b2df7-4aaf-11e6-bb16-28b2bd168d07 | 127.0.0.1 | 13001 | ONLINE | | group_replication_applier | 199bb88e-4aaf-11e6-babe-28b2bd168d07 | 127.0.0.1 | 13000 | ONLINE | | group_replication_applier | 19ab72fc-4aaf-11e6-bb51-28b2bd168d07 | 127.0.0.1 | 13003 | ONLINE | | group_replication_applier | 19b33846-4aaf-11e6-ba81-28b2bd168d07 | 127.0.0.1 | 13004 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 5 rows in set (0,00 sec)

However, moments later there is a catastrophic failure and

servers s3, s4 and s5 stop unexpectedly. A few seconds after

this, looking again at the

replication_group_members table on s1 shows

that it is still online, but several others members are not. In

fact, as seen below they are marked as

UNREACHABLE. Moreover, the system could not

reconfigure itself to change the membership, because the

majority has been lost.

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 1999b9fb-4aaf-11e6-bb54-28b2bd168d07 | 127.0.0.1 | 13002 | UNREACHABLE | | group_replication_applier | 199b2df7-4aaf-11e6-bb16-28b2bd168d07 | 127.0.0.1 | 13001 | ONLINE | | group_replication_applier | 199bb88e-4aaf-11e6-babe-28b2bd168d07 | 127.0.0.1 | 13000 | ONLINE | | group_replication_applier | 19ab72fc-4aaf-11e6-bb51-28b2bd168d07 | 127.0.0.1 | 13003 | UNREACHABLE | | group_replication_applier | 19b33846-4aaf-11e6-ba81-28b2bd168d07 | 127.0.0.1 | 13004 | UNREACHABLE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 5 rows in set (0,00 sec)

The table shows that s1 is now in a group that has no means of progressing without external intervention, because a majority of the servers are unreachable. In this particular case, the group membership list needs to be reset to allow the system to proceed, which is explained in this section. Alternatively, you could also choose to stop Group Replication on s1 and s2 (or stop completely s1 and s2), figure out what happened with s3, s4 and s5 and then restart Group Replication (or the servers).

Group replication enables you to reset the group membership list

by forcing a specific configuration. For instance in the case

above, where s1 and s2 are the only servers online, you could

chose to force a membership configuration consisting of only s1

and s2. This requires checking some information about s1 and s2

and then using the

group_replication_force_members

variable.

Suppose that you are back in the situation where s1 and s2 are the only servers left in the group. Servers s3, s4 and s5 have left the group unexpectedly. To make servers s1 and s2 continue, you want to force a membership configuration that contains only s1 and s2.

This procedure uses

group_replication_force_members

and should be considered a last resort remedy. It

must be used with extreme care and only

for overriding loss of quorum. If misused, it could create an

artificial split-brain scenario or block the entire system

altogether.

Recall that the system is blocked and the current configuration is the following (as perceived by the local failure detector on s1):

mysql> SELECT * FROM performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | 1999b9fb-4aaf-11e6-bb54-28b2bd168d07 | 127.0.0.1 | 13002 | UNREACHABLE | | group_replication_applier | 199b2df7-4aaf-11e6-bb16-28b2bd168d07 | 127.0.0.1 | 13001 | ONLINE | | group_replication_applier | 199bb88e-4aaf-11e6-babe-28b2bd168d07 | 127.0.0.1 | 13000 | ONLINE | | group_replication_applier | 19ab72fc-4aaf-11e6-bb51-28b2bd168d07 | 127.0.0.1 | 13003 | UNREACHABLE | | group_replication_applier | 19b33846-4aaf-11e6-ba81-28b2bd168d07 | 127.0.0.1 | 13004 | UNREACHABLE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 5 rows in set (0,00 sec)

The first thing to do is to check what is the peer address (group communication identifier) for s1 and s2. Log in to s1 and s2 and get that information as follows.

mysql> SELECT @@group_replication_local_address; +-----------------------------------+ | @@group_replication_local_address | +-----------------------------------+ | 127.0.0.1:10000 | +-----------------------------------+ 1 row in set (0,00 sec)

Then log in to s2 and do the same thing:

mysql> SELECT @@group_replication_local_address; +-----------------------------------+ | @@group_replication_local_address | +-----------------------------------+ | 127.0.0.1:10001 | +-----------------------------------+ 1 row in set (0,00 sec)

Once you know the group communication addresses of s1

(127.0.0.1:10000) and s2

(127.0.0.1:10001), you can use that on one of

the two servers to inject a new membership configuration, thus

overriding the existing one that has lost quorum. To do that on

s1:

mysql> SET GLOBAL group_replication_force_members="127.0.0.1:10000,127.0.0.1:10001"; Query OK, 0 rows affected (7,13 sec)

This unblocks the group by forcing a different configuration.

Check replication_group_members on both s1

and s2 to verify the group membership after this change. First

on s1.

mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | b5ffe505-4ab6-11e6-b04b-28b2bd168d07 | 127.0.0.1 | 13000 | ONLINE | | group_replication_applier | b60907e7-4ab6-11e6-afb7-28b2bd168d07 | 127.0.0.1 | 13001 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 2 rows in set (0,00 sec)

And then on s2.

mysql> select * from performance_schema.replication_group_members; +---------------------------+--------------------------------------+-------------+-------------+--------------+ | CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ | group_replication_applier | b5ffe505-4ab6-11e6-b04b-28b2bd168d07 | 127.0.0.1 | 13000 | ONLINE | | group_replication_applier | b60907e7-4ab6-11e6-afb7-28b2bd168d07 | 127.0.0.1 | 13001 | ONLINE | +---------------------------+--------------------------------------+-------------+-------------+--------------+ 2 rows in set (0,00 sec)

When forcing a new membership configuration, make sure that any servers are going to be forced out of the group are indeed stopped. In the scenario depicted above, if s3, s4 and s5 are not really unreachable but instead are online, they may have formed their own functional partition (they are 3 out of 5, hence they have the majority). In that case, forcing a group membership list with s1 and s2 could create an artificial split-brain situation. Therefore it is important before forcing a new membership configuration to ensure that the servers to be excluded are indeed shutdown and if they are not, shut them down before proceeding.

This section explains how to back up and subsequently restore a Group Replication member using MySQL Enterprise Backup; the same technique can be used to quickly add a new member to a group. Generally, backing up a Group Replication member is no different to backing up a stand-alone MySQL instance. The recommended process is to use MySQL Enterprise Backup image backups and a subsequent restore, for more information see Backup Operations.

The required steps can be summarized as:

Use MySQL Enterprise Backup to create a backup of the source server instance with simple timestamps.

Copy the backup to the destination server instance.

Use MySQL Enterprise Backup to restore the backup to the destination server instance.

The following procedure demonstrates this process. Consider the following group:

mysql> SELECT * member_host, member_port, member_state FROM performance_schema.replication_group_members;

+-------------+-------------+--------------+

| member_host | member_port | member_state |

+-------------+-------------+--------------+

| node1 | 3306 | ONLINE |

| node2 | 3306 | ONLINE |

| node3 | 3306 | ONLINE |

+-------------+-------------+--------------+

In this example the group is operating in single-primary mode and

the primary group member is node1. This means

that node2 and node3 are

secondaries, operating in read-only mode

(super_read_only=ON). Using

MySQL Enterprise Backup, a recent backup has been taken of node2

by issuing:

mysqlbackup --defaults-file=/etc/my.cnf --backup-image=/backups/my.mbi_`date +%d%m_%H%M` /

--backup-dir=/backups/backup_'date +%d%m_%H%M' --user=root -pmYsecr3t /

--host=127.0.0.1 --no-history-logging backup-to-image

The --no-history-logging option is used because

node2 is a secondary, and because it is

read-only MySQL Enterprise Backup cannot write status and metadata tables to the

instance.

Assume that the primary member, node1,

encounters irreconcilable corruption. After a period of time the

server instance is rebuilt but all the data on the member was

lost. The most recent backup of member node2

can be used to rebuild node1. This requires

copying the backup files from node2 to

node1 and then using MySQL Enterprise Backup to restore the

backup to node1. The exact way you copy the

backup files depends on the operating system and tools available

to you. In this example we assume Linux servers and use SCP to

copy the files between servers:

node2/backups # scp my.mbi_2206_1429 node1:/backups

Connect to the destination server, in this case

node1, and restore the backup using MySQL Enterprise Backup by

issuing:

mysqlbackup-4.1 --defaults-file=/etc/my.cnf --backup-image=/backups/`ls -1rt /backups|tail /

-n 1` --backup-dir=/tmp/restore_`date +%d%m_%H%M` copy-back-and-apply-logThe backup is restored to the destination server.

If your group is using multi-primary mode, extra care must be taken to prevent writes to the database during the MySQL Enterprise Backup restore stage and the Group Replication recovery phase. Depending on how the group is accessed by clients, there is a possibility of DML being executed on the newly joined instance the moment it is accessible on the network, even prior to applying the binary log before rejoining the group. To avoid this, configure the member's option file with:

group_replication_start_on_boot=OFF super_read_only=ON event_scheduler=OFF

This ensures that Group Replication is not started on boot, that the member defaults to read-only and that the event_scheduler is turned off while the member catches up with the group during the recovery phase. Adequate error handling must be configured on the clients to recognise that they are, temporarily, prevented from performing DML during this period.